
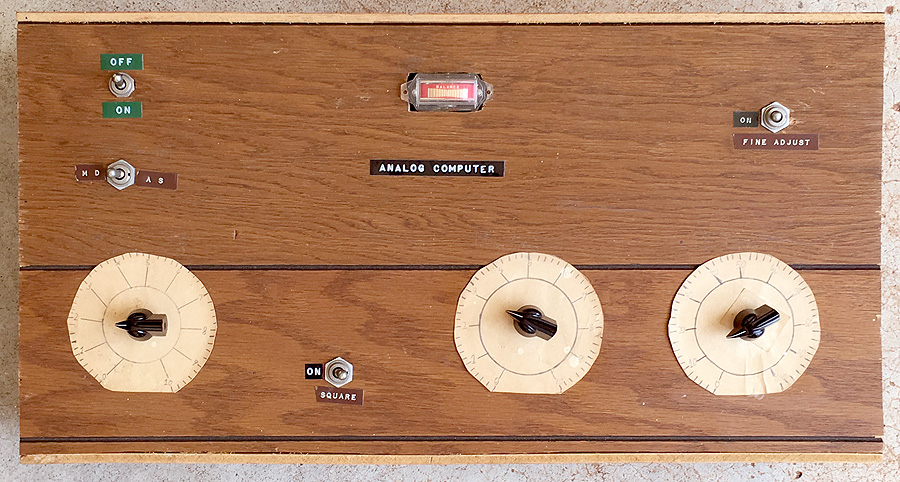

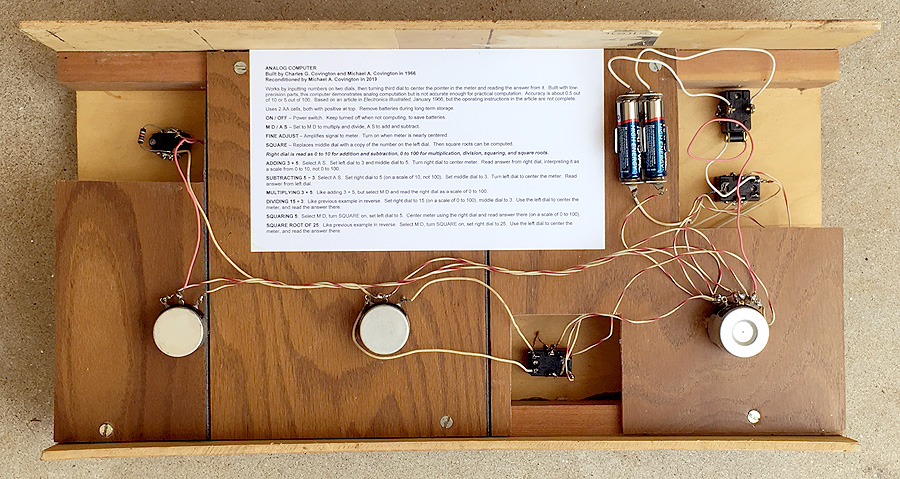

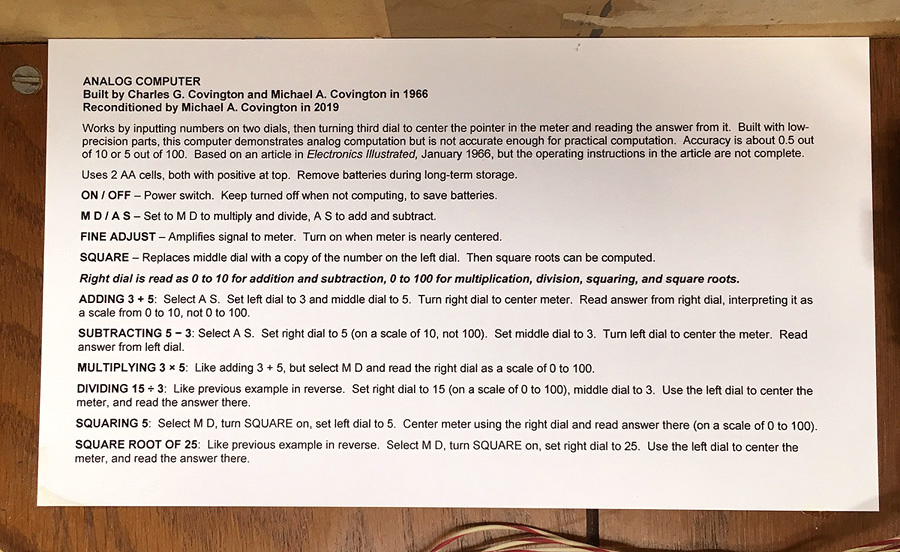

A sketch of the history of amateur astronomy

After a long lapse, just like London buses, here come three or four Notebook

entries at once. I'm getting caught up!

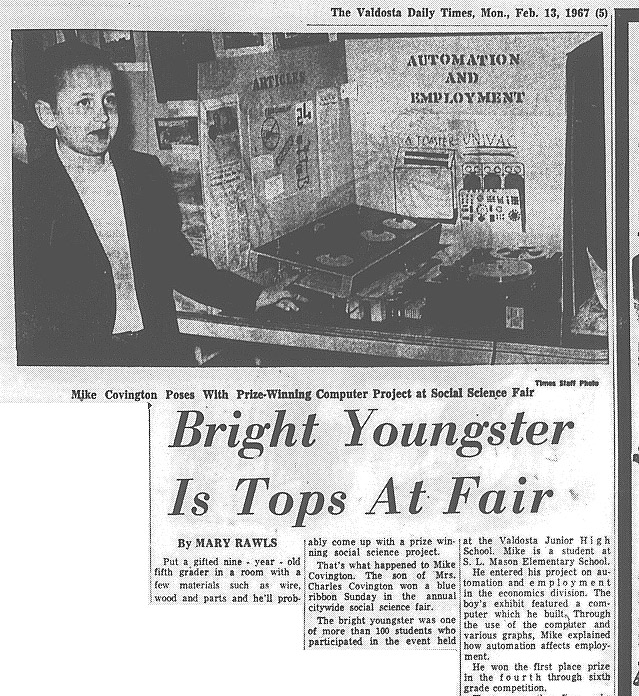

Last night I gave a talk to the Atlanta Astronomy Club

at the Fernbank Science Center in a room that used to be their

library, but has been converted into a multi-purpose room with just a few books.

(Sad, but if they hadn't been keeping up the library, it must have become almost

useless in 50 years. I first visited it in October 1968.)

The topic was the history of amateur astronomy, a subject I've never seen

anyone survey. I've seen lots of details about particular astronomers and organizations,

but not the big picture. In what follows I'm going to give you a very short summary

of my talk.

I divide the history into five periods, but there are no walls on the borders between them, and some of the events typical of each period fall just outside its borders.

(1) Victorian England (1837-1901). Amateur astronomy worldwide has deep roots in

the England of Queen Victoria's era. Most present-day serious amateurs have read at

least one Victorian astronomy book.

This is the period when both professional and amateur astronomy became established

as distinct pursuits. Academic astronomy became professionalized, with the establishment

of organizations, journals, and professorships; it was no longer a part-time specialty

for mathematicians or the like. And two kinds of amateur astronomy emerged.

The first kind comprised "grand amateurs," as Chapman calls them: well-to-do people who built observatories at their own expense (sometimes even hiring observers) and became serious unsalaried scientists.

The first prominent one was probably Admiral W. H. Smyth, whose deep-sky observing guide is still in print; in its time, it was serious original science. Another was Isaac Roberts, pioneer astrophotographer. And the last and greatest was Percival Lowell, an American and the founder of Lowell Observatory.

The second kind comprised people who used "common telescopes" (affordable small refractors), as encouraged by Rev. T. W. Webb's Celestial Objects for Common Telescopes, another book that is still in print and widely used.

Crucially, in his introduction, Webb encouraged people to appreciate the sky as a form of

self-improvement, and to better appreciate God's creation, even if there was no

possibility of contributing to science.

That was the manifesto for amateur astronomy: it is good to learn about the sky and enjoy

looking at it, just for its own sake.

(2) The early 20th Century (1901-1957).

This is the period when amateur astronomy grew, formed organizations, spun off

amateur telescope making as a sub-hobby, and (to a considerable extent) moved the

center of innovative activity to the American Northeast.

The British Astronomical Association, for amateurs as distinct from professionals,

dates from 1890 (the British are often ahead of their time).

Other organizational developments include the AAVSO, the Springfield Telescope Makers and Stellafane, the Scientific American amateur science column and telescope-making books, Sky and Telescope, and the ALPO.

Meanwhile, professional astronomy became radically different from amateur astronomy.

Professionals were discovering the nature of galaxies and the expansion of the universe,

using the largest possible telescopes (Mt. Wilson and Palomar).

Amateurs continued the Victorians' interest in lunar and planetary observing.

(3) The Space Race (1957-1969).

The time between Sputnik and Apollo 11 was a time of great enthusiasm for astronomy and for science in general, with increased funding for astronomy education and a proliferation of college observatories and planetariums, but also uncertainty about the future.

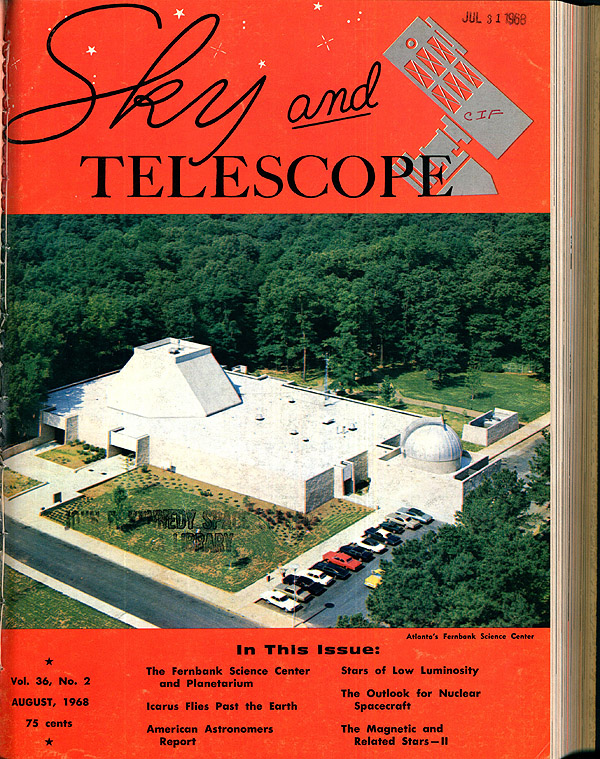

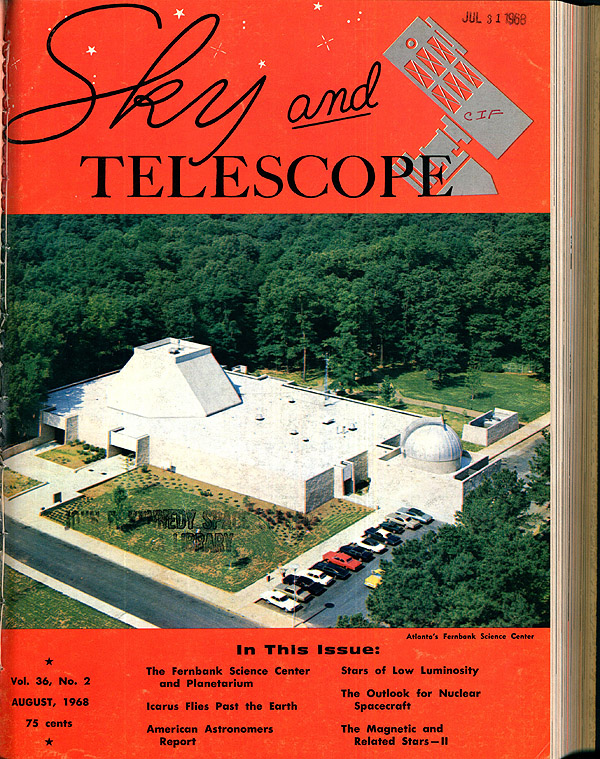

Here is the Fernbank Science Center, where I gave my talk, very much a product of that era:

Notice that Sky and Telescope was, in those days, a magazine for professional

astronomers and educators as well as amateurs. It was rarely or never sold on newsstands.

It mostly kept people together who were already connected.

Outsiders found out about amateur astronomy through Scientific American and through

the advertisements of Edmund Scientific Company, which appeared in general-interest

magazines such as Popular Mechanics. Amateur astronomy broke upon me through

Edmund's Catalog 645.

One good thing is that planetary science suddenly came back to life — professionals

started taking an interest in the moon and planets (and soliciting data from amateurs,

who had never given them up).

Amateur astronomy was thriving, but there was uncertainty.

Was "space" the same thing as astronomy?

(It's a long way from rockets to quasars, and it's always going to be a long way,

and many people failed to appreciate the fact that we can never explore much

of the universe by physically going there.)

Were ground-based astronomers about to be made unnecessary by space probes?

No one knew.

(4) The late 20th Century (1969-2000).

As the dust settled after Apollo 11 — in exactly the era when I was becoming an

amateur astronomer — amateur astronomy was changing directions.

The most obvious fact was that professionals were divided from amateurs not so much by

interests as by technology. They had big telescopes, autoguiders, special photographic

plates, and even digital image sensors (since 1976). Amateurs were making do with equipment

little changed from Victorian times, at least at first.

Amateur telescopes were more affordable.

Amateur telescope making was no longer on the rise because you no longer had to make a telescope in order to have one. Criterion's RV-6 reflector (6-inch f/8) was a key product. I got one in 1970, and its $195 price held constant through more than a decade of high inflation, so it became more affordable every year.

Though not cheap in 1970 dollars, $195 was not more than a teen-ager could earn in a summer,

or (as in my case) prevail on his parents to provide.

Those who did want to make telescopes took a cue from John Dobson of the San Francisco

Sidewalk Astronomers and got a lot more for their money.

The idea was no longer to imitate commercial products, but rather to build a "Dobsonian"

telescope that delivers more performance for less cost.

Medium-sized telescopes became very affordable, and large telescopes (up to 30 inches) came within amateur reach.

Commercially made Dobsonians appeared on the market and were also bargains.

This enabled serious amateur viewing of faint galaxies.

The other big change in telescopes was the Celestron Schmidt-Cassegrain, made as an observatory instrument in the 1960s and mass-marketed starting around 1973.

What Celestron gave us was portability. It was easy to put your telescope in the car,

head for a remote dark-sky site, and set it up there in a matter of minutes.

Celestrons were also ready for astrophotography to a greater extent than any

earlier design had been.

Amateur astronomy shifted direction.

Many amateurs, including me, advocated "taking the esthetic path" and viewing the sky

as a sightseer, without trying to contribute to science.

This is just what Webb had advocated, and a new magazine sprang up to cater to the new

kind of amateurs. Astronomy began publication in 1973 and was unconnected to

professional astronomy, except for some coverage of research results; most of its content

was aimed at amateur observers. Sky and Telescope was eventually remodeled to

become much more like it.

Fortunately, we spun off another sub-hobby, astrophotography, and it ended up being our

salvation. If we amateurs couldn't make discoveries about galaxies, maybe we could at least contribute

to photographic science. And we did. This was the beginning of the process by which

amateur and professional observing technology came together again.

(5) The early 21st Century (2001-present).

After 2000, amateur and professional capabilities came back together to a remarkable extent.

Our digital image sensors are just like professional ones, just smaller — it's the same

technology. We amateurs have access to a professional library (ADSABS), a professional deep-sky database (SIMBAD), and the same image processing software that professionals use.

Our optics have gotten somewhat better (with inventions such as Celestron's EdgeHD and RASA),

and there's a veritable arms race going on to improve portable equatorial mounts;

we complain about their irregularities, but they are better than anything money

could buy 20 years ago, even professional money.

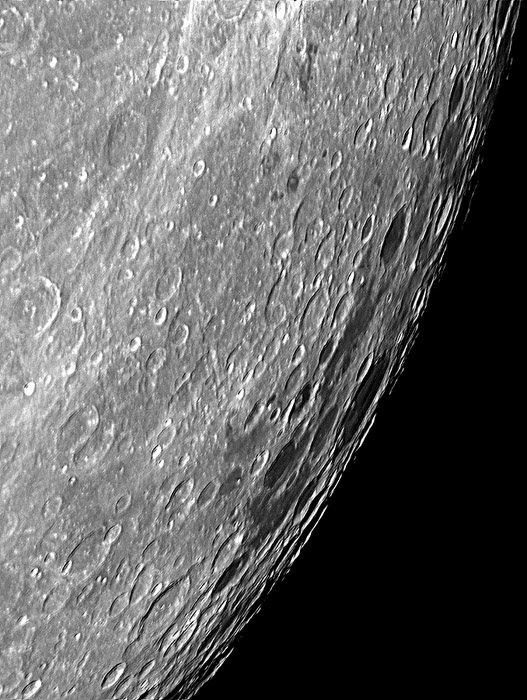

And amateurs with 14-inch telescopes routinely photograph Jupiter as well as any ground-based observatory can.

With small portable telescopes, we've started photographing nebulae that are not well known to science,

including the integrated flux nebulosity (IFN, galactic cirrus).

Meanwhile, professionals are using mass-produced portable instruments for research

projects such as Dragonfly.

Are space probes and orbiting observatories about to make us obsolete?

I don't think so, because although the supply of data from them is massive, the demand still

exceeds the supply. The more people discover, the more they can discover.

And anyhow, someone needs to analyze the data that the observatories gather.

A new but important form of pro-am collaboration is for amateurs to examine or even

process image data from professional observatories. The Internet makes this possible.

I think the time has come for amateurs to turn back to science, not just sightseeing.

There is nothing at all wrong with just enjoying the majesty of the sky and

capturing its beauty photographically, but that is no longer the only thing we can do.

In my opinion, we need to do some organizing.

The only all-purpose organization we have is the BAA.

In the United States, for planets we have the ALPO, and for variable stars, the AAVSO, but much needs to be done to further utilize the new capabilities that amateurs can offer.

Permanent link to this entry

A mystery about the invention of the transistor

[Updated.]

I've been reading about the invention of the transistor by Bardeen, Brattain, and Shockley

in 1947 (A History of Engineering and Science in the Bell System: Electronics Technology

(1925-1975), ed. F. M. Smits, AT&T, 1985). And there turns out to be a puzzle involved.

As is well known, the researchers were trying to make a field-effect transistor (a device in which

the conductivity of a semiconductor material is influenced by a nearby electric field).

This had been conceived by others earlier, but not successfully implemented.

(Working field-effect transistors were in fact made a while later; they work well, and your PC is made of them.)

But at the time, their field-effect transistor didn't work, and while trying to figure out

why, they stumbled on something useful but mysterious, now known as

the ordinary (bipolar) transistor.

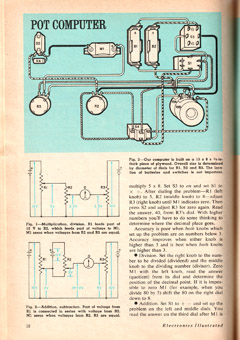

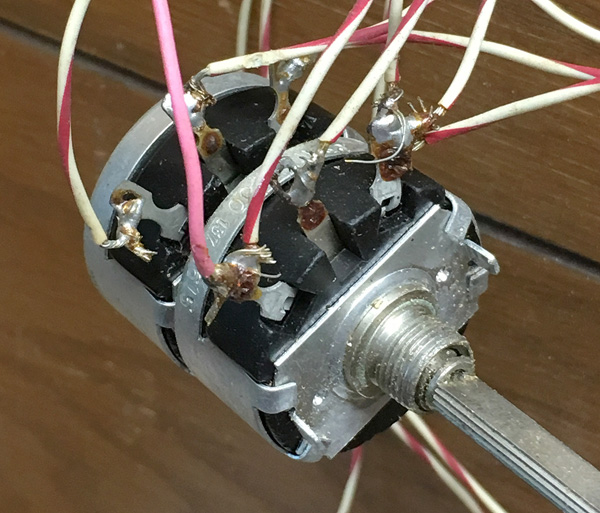

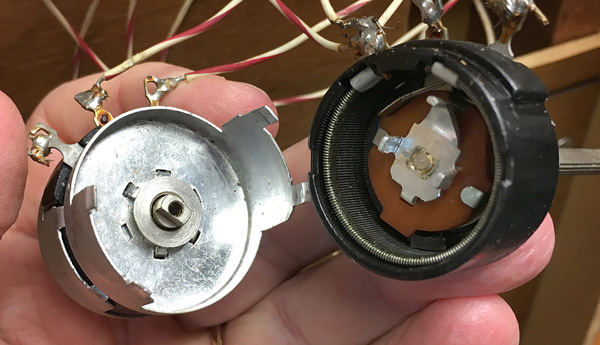

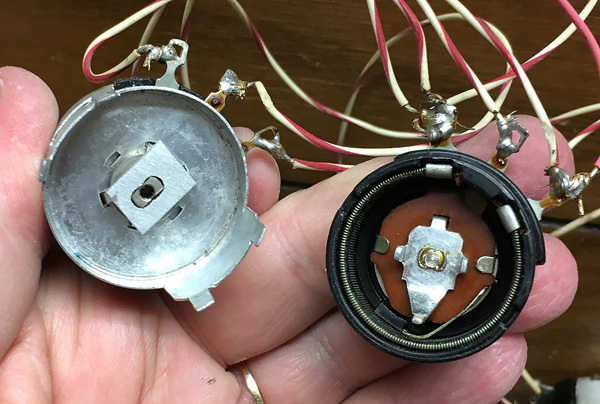

The first transistor was a crystal of N-type germanium with two tiny gold "cat's whiskers"

sticking down onto it, close together, supported by a triangular insulator.

This is like the way diodes were made, but with two cat's whiskers instead of one.

Because the support was triangular, it really did look like the transistor symbol in

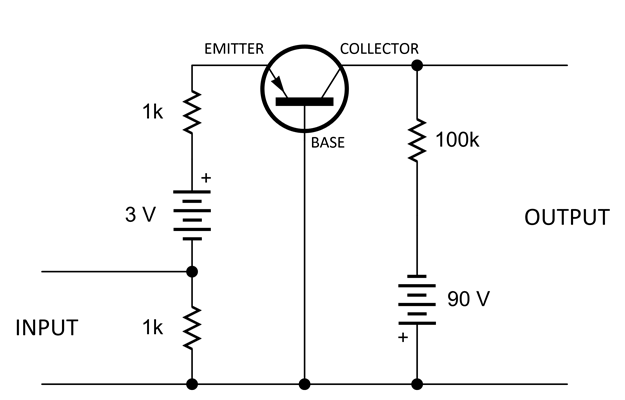

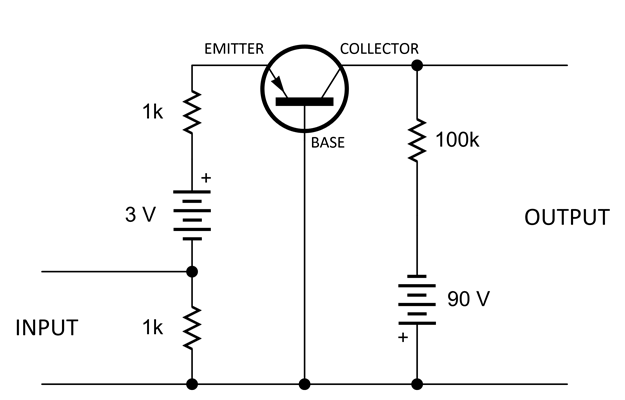

the circuit diagram below.

This is the circuit in Brattain's lab notebook, showing the first transistor amplifier.

It's an audio amplifier, so the input and output are small variations in the voltages at

the indicated points; the idea was to turn small variations into bigger ones, and it worked.

You could build this with modern transistors if you change that 90-volt battery to about 12 volts, because 90 volts will burn up most of the transistors we use today.

The block of N-type germanium is called the "base" and the cat's whiskers

are the "emitter" and "collector." The idea is that small changes in emitter current

affect the collector current.

Just to confuse you, because this is a PNP transistor, the emitter emits, and the

collector collects, not electrons but "holes," places where electrons are missing from

the crystal structure. A key point of transistor theory is that holes and electrons

act just alike, but flow in opposite directions.

Modern transistors don't have cat's whiskers. They have areas of P-type germanium

(or rather silicon nowadays) in a crystal of N-type.

Now then. With a modern transistor, when you inject a current into the emitter,

a fixed percentage of it (about 2%) flows into the base, and the rest goes on to

the collector. Power amplification occurs because the collector is supplied from a

much higher voltage than the emitter; the same number of milliamps makes more watts

at a higher voltage. You have a small current controlling a current that is 98% as

big, but powered by a higher voltage. That's an amplifier. You also have voltage

amplification because the output is taken across a much larger resistor than the input;

the (nearly) same number of milliamps makes more volts.
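To put rough numbers on this, here is a minimal Python sketch of the arithmetic for an idealized amplifier stage. The 0.98 figure is the 98% mentioned above; the two resistor values and the function name are purely illustrative assumptions, not values taken from Brattain's circuit.

```python
def common_base_gains(alpha, r_in_ohms, r_load_ohms):
    """Small-signal gains of an idealized stage (illustrative sketch only).

    alpha       -- fraction of an emitter-current change that reaches the collector
    r_in_ohms   -- resistance the input current flows through (assumed value)
    r_load_ohms -- larger resistor the output is taken across (assumed value)
    """
    current_gain = alpha                            # nearly the same milliamps out as in
    voltage_gain = alpha * r_load_ohms / r_in_ohms  # same milliamps, bigger resistor, more volts
    power_gain = current_gain * voltage_gain        # power gain = current gain x voltage gain
    return current_gain, voltage_gain, power_gain


# Hypothetical resistor values, chosen only to make the arithmetic concrete.
current_gain, voltage_gain, power_gain = common_base_gains(0.98, 500.0, 10_000.0)
print(f"current gain {current_gain:.2f}, "
      f"voltage gain {voltage_gain:.1f}, "
      f"power gain {power_gain:.1f}")
```

With these assumed values the current gain is slightly less than 1, yet the voltage and power gains both come out near 20, which is the point of the paragraph above.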

So far so good, but the inventors' original transistor did something more.

The current variation at the collector, in response to variation at the emitter,

was not 98% or so; it was appreciably more than 100% (about 180% in Bell Labs'

first mass-produced transistor).

In technical terms, α > 1.0.

The rest of the current came from the base, of course.
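The bookkeeping can be sketched in a few lines of Python. This is nothing more than conservation of current applied to small changes; the 1.8 figure is the roughly 180% quoted above, while the function name and the sign convention (a negative base value meaning the base supplies current instead of absorbing it) are assumptions made for illustration.

```python
def split_emitter_change(alpha, delta_emitter_ma):
    """Split a change in emitter current between collector and base.

    Returns (collector change, base change) in milliamps. A positive base
    value means the base absorbs part of the change; a negative value
    means the base has to supply the extra current.
    """
    delta_collector_ma = alpha * delta_emitter_ma
    delta_base_ma = delta_emitter_ma - delta_collector_ma
    return delta_collector_ma, delta_base_ma


# A 1 mA change at the emitter, for a modern transistor and a point-contact one.
for label, alpha in [("modern junction transistor", 0.98),
                     ("point-contact transistor", 1.8)]:
    collector, base = split_emitter_change(alpha, 1.0)
    print(f"{label}: collector {collector:+.2f} mA, base {base:+.2f} mA")
```

For α below 1 the base term is a small positive leftover; once α exceeds 1 it turns negative, which is just another way of saying that the extra collector current has to come from the base.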

Why this is so seems to have remained somewhat mysterious.

The metal cat's whiskers seem to do something

unusual to the crystal where they touch it, creating a further amplifying effect at the collector.

Shockley's 1950 book (section 4.5) proposes that the metal-to-semiconductor contact creates not only the P-type collector of the main transistor, but also a further N-type region, so the whole thing is not PNP but PNPN. He calls this a p-n hook. This is not unlike two transistors in cascade (and sharing two electrodes), the second one amplifying the output of the first.

I don't know if this theory is still held.

As far as I can tell, uncertainty persisted right up to the end of the

point-contact-transistor era (which only lasted a couple of years), partly

because no one quite knew all the microscopic effects of the manufacturing process.

Then people lost interest in the question.

The college admissions scandal

It has been discovered that parents were bribing staff members at Yale, USC, and several other

universities to get their children admitted. The tactic was to tell admissions officials that

the children were being recruited as varsity athletes when they weren't.

There are also reports of unauthorized help on entrance exams, exams taken by impostors,

and the like. I have several thoughts

(and thank several people who discussed this with me on Facebook, especially Avery Andrews

and Al Cave).

(1) If people paid $500,000 bribes and still didn't succeed, this speaks highly of the

usual integrity of the admissions system. Contrary to some chit-chat, this doesn't prove the system

is corrupt and everybody is buying their way in. Quite the contrary! The high price indicates

that successful bribery is uncommon and difficult. If people were regularly taking payments

under the table, they'd make them affordable in order to collect more money.

(2) What people are realizing is that educational fraud can rise to the level of being a crime.

We may need to revise some laws to clarify how educational fraud is handled. Make it an actual

crime, not a civil liability, to falsify educational records, take tests, or even do homework for someone else.

This is analogous to the way laws against forgery and computer hacking have been developed

for clearer handling of acts that would already have been illegal.

(3) You don't want to go to a college that you can't properly get into. You'll be the weakest student there.

Why do people think college admission is just a status symbol? It's a judgment of whether you can pass the courses.

(I know this doesn't apply universally. Some colleges want to have a "happy bottom quarter," a subpopulation

of students who aren't extremely competitive; it reduces the pressure on the others. But I still think

tricking a college is a bad idea. I've seen it turn out badly for people. And of course if you trick them

into giving you a scholarship, you're stealing money.)

(4) As a society, we need to think about whether we really believe athletes deserve better educational

opportunities than the rest of society.

Of course, it is praiseworthy when students work hard at sports (or anything else) to open up opportunities.

The students deserve credit for recognizing, and working hard to utilize, whatever opportunities are made

available to them.

And it is fortunate that athletics is often a path from the ghetto to higher education and success. But is athletic

prowess the only criterion by which people should be selected for this? What about disadvantaged young people

who aren't athletic?

Finally, there is the big-money aspect of college football. The NFL has no farm teams. College football

serves as a farm system for the NFL and brings

in huge amounts of money for the colleges. But the players are required to be amateur (student) athletes

and get none of the money (except indirectly as educational benefits). I wonder if this is the right

relationship between laborers (that's what the players are) and the profits from their work.

I should add that I'm in favor of sports in college. Students enjoy it, and the college is

an organized setting that makes it easy to organize teams and games. But its intention should be more

recreational than it is now.

(5) What to do with the students involved in the scam is unclear. Here are my thoughts.

(a) Students who were consciously involved in the scam should be dealt with severely — expel them, even

revoke degrees. It is hard for a student to be unaware that someone is taking tests for him or giving

him unauthorized help on tests, for example. Such a person is likely to cheat at other things his whole

life long unless stopped.

(b) If a student benefited from the scam but was unaware of it — parents did it behind his back —

the situation is more difficult. I think that if the student is less than halfway through the degree program

(and hence can transfer), his admission should be revoked and he should finish college somewhere else.

This is not punishment, but correction of an erroneous administrative action; the university never intended

to admit that student, and they're just putting him back on the path he should have been on.

(c) If the student, unaware of the scam, is more than halfway through and has decent grades,

or has graduated, then I don't think he should lose anything.

At this point he's earning or has earned the degree.

But his parents, or whoever arranged the cheating, should have to pay back any financial aid that was secured as a result of falsifications. (Many private universities award some kind of scholarship to almost every student, not just the needy.)

(6) What about the other good students who were turned down because scam-assisted students were

admitted ahead of them? There is really no way to make such people whole; it may not even be

possible to identify them with certainty. (Exactly who would have been admitted if there had been

one more slot? You may or may not have records that say. For privacy, applications and rankings

from past years may well have been destroyed. You may not even have made the decision —

you would have looked at more applicants and made more decisions if you had had more slots.)

Fortunately, such people are good at making the most of other opportunities. Maybe they went to other

universities that were, for them, actually better.

If they can be identified, then: (a) if only part way through a degree program elsewhere, they should

be invited to transfer in; (b) they should be given some kind of formal recognition by the university that

wanted to admit them and was scammed out of it, something they can list on their résumés.

Short notes

I am aghast at the mass shooting at mosques in New Zealand, a country that normally

has little violent crime. As I write this, I don't have enough facts to do more than express

sadness and dismay. But I do have a concern. Over the last few years, the scale of acceptable behavior and rhetoric in the United States has shifted; white supremacists have an easier time considering themselves patriots, much less distant from the political mainstream than they used to be.

To what extent did North American white supremacism, and North Americans' toleration or encouragement

of it, lead to this New Zealand crime?

On a much less serious matter, I am glad to hear lots of sentiment in favor of year-round

Daylight Saving Time. Let's get rid of the twice-yearly changes.

And if the schoolchildren have to wait for the bus in the dark in Michigan, change the time

of school there. Don't command all of us to change our clocks twice a year.
