2020 October 29

Field of Gamma Cygni (Sadr)

Click on the picture (and, in most browsers, click again) to see it enlarged.

Yet another great picture from Deerlick on October 17, again one of my best-ever astronomical images. This is a stack of twenty-one 2-minute exposures with an Askar 200-mm f/4 lens, Nikon D5500 (H-alpha-modified) camera, and Celestron AVX mount. Why twenty-one? Because I wanted twenty, took about twenty-five, and kept the ones that were good.
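In its simplest form, "stacking" combines the aligned exposures pixel by pixel. Random noise averages down, by roughly the square root of the number of frames (about 4.6× for twenty-one), while stars and nebulae stay put. The sketch below is an illustration only: the post doesn't say which stacking program or combining method was used. It assumes the frames are already registered (aligned) and uses a sigma-clipped mean, one common choice, which also rejects outliers such as a satellite trail that appears in a single frame. The names `stack` and `sigma_clipped_mean` and the default `kappa=2.5` are hypothetical.

```python
import statistics


def sigma_clipped_mean(values, kappa=2.5):
    """Average one pixel's values across frames, ignoring outliers.

    Values farther than kappa standard deviations from the median (a satellite
    trail, a cosmic-ray hit) are dropped before averaging.
    """
    center = statistics.median(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return center
    kept = [v for v in values if abs(v - center) <= kappa * spread]
    return statistics.fmean(kept) if kept else center


def stack(frames, kappa=2.5):
    """Combine aligned frames, each a flat list of pixel values, pixel by pixel."""
    n_pixels = len(frames[0])
    if any(len(f) != n_pixels for f in frames):
        raise ValueError("frames must be aligned and the same size")
    return [sigma_clipped_mean([f[i] for f in frames], kappa) for i in range(n_pixels)]
```

For example, twenty identical frames plus one with a bright streak through the middle pixel stack to the clean values, because the streaked value lies far outside the spread of the others.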

My first deep-sky observation was when, at age 10, I looked at this star field through my grandfather's 7×35 binoculars at his home in Jenkins County, Georgia, where the skies were (and still are) very dark and free of city lights. The red nebulae were not visible, of course, but the clouds of stars fascinated me.

Besides Milky Way star clouds and wisps of red nebulosity, note the jellyfish-shaped Crescent Nebula near the right edge toward the bottom of the picture, and, at the lower left corner, a star enveloped in white reflection nebulosity (dust, not ionized gas).


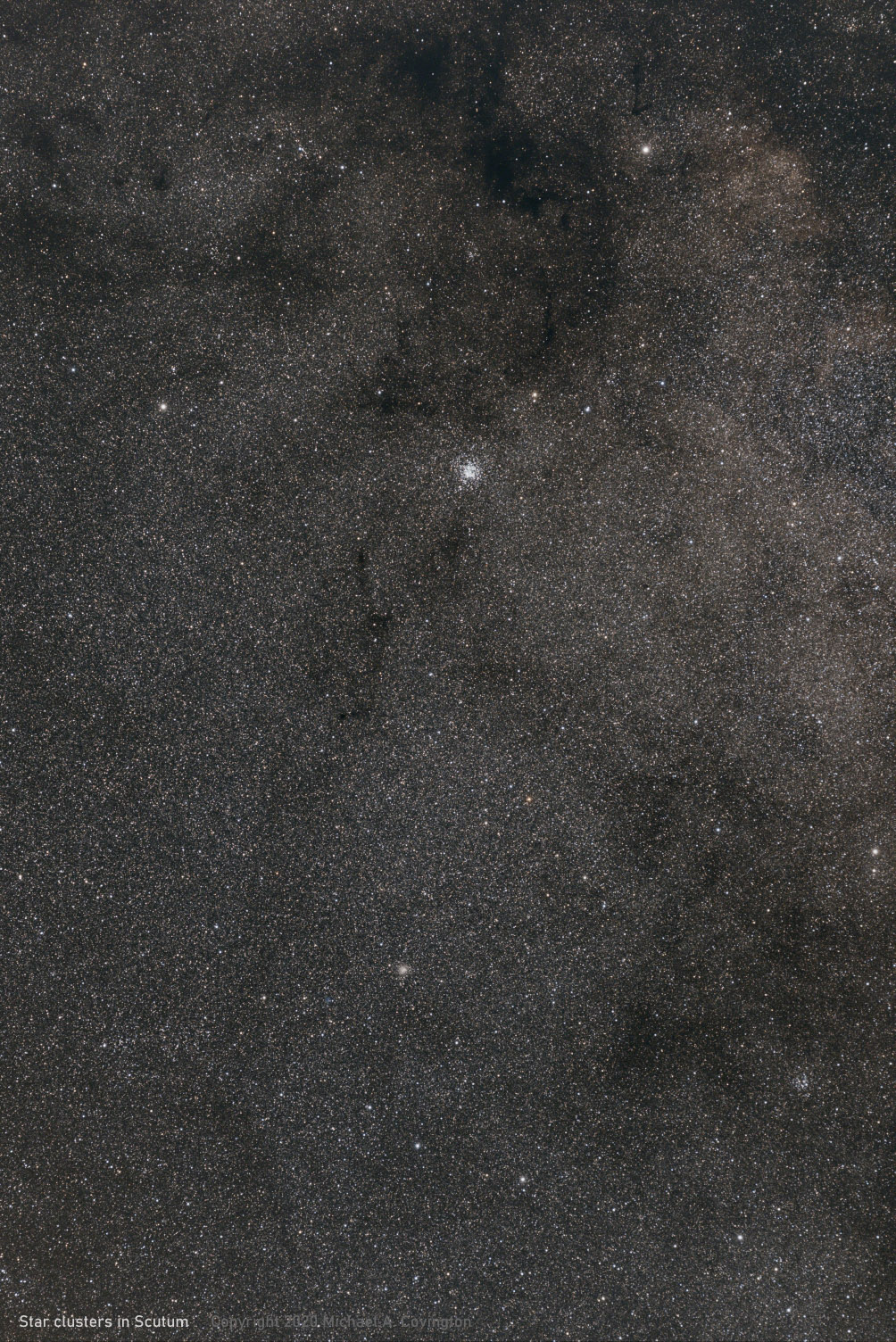

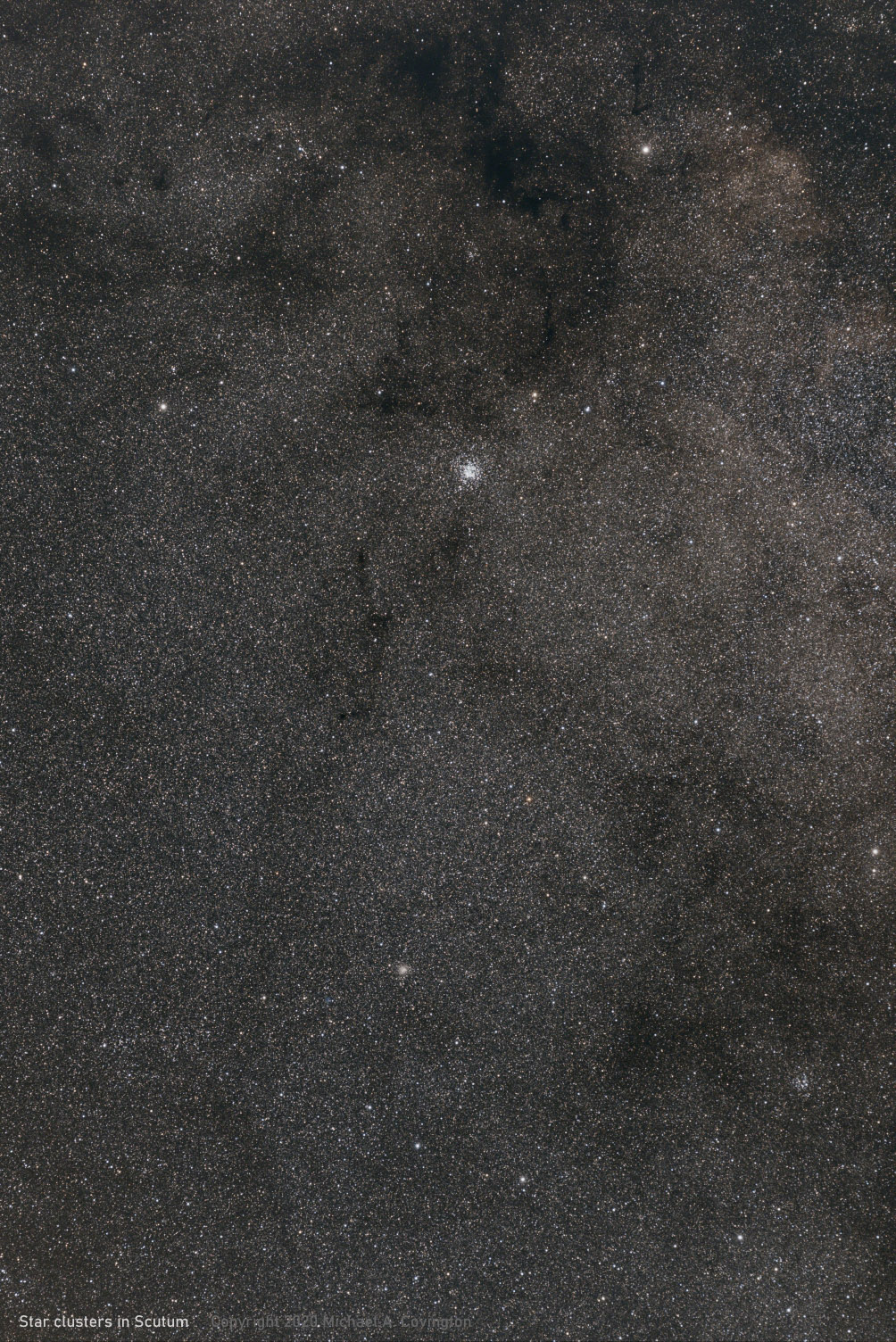

Scutum and M11

One last picture from Deerlick.

This is from a series of exposures that I started but decided not to continue because the object was getting close to the horizon.

It's the star cloud in the middle of the constellation Scutum and the bright star cluster M11.

Same equipment as above, but only six exposures stacked.


2020 October 27

A walk through Sagittarius

Here's another picture from my trip to Deerlick on October 17.

You're looking at the Swan Nebula (M17, above center), the star cloud M24 (dominating the lower half of the picture), and many other interesting objects, including a dark nebula (dust cloud) in front of M24.

Click (and then click again) to view this picture enlarged.

Stack of ten 2-minute exposures, Askar 200-mm f/4 lens wide open, Nikon D5500 body (H-alpha modified), Celestron AVX mount with PEC, no guiding corrections.


2020 October 26

My advice to American voters

I have not endorsed a candidate for President and am not going to.

But I do have the following advice for my fellow citizens.

Vote. You have some influence, even if it's tiny. Use it.

Vote for the candidate you think is preferable, even if both are bad, and even if the difference is slight.

This is not a football game. You do not have to pick a side and root for it.

You do not have to tell people how you are going to vote, or display campaign signs. Just vote.

Do not let politics divide you from your friends and neighbors.

Remember that people differ about which of two imperfect candidates is preferable, and what is actually most likely to happen if they are elected, not about what is good and what is evil.

Do not believe extreme insults hurled by either side at the other.

If you are eager to believe people are worse than they really are, you are in a twisted state of mind and are not being fair.

If you cannot vote for the party you normally support, remember that that doesn't mean you've joined the other party.

Vote for the less bad candidate, but continue standing for what you stand for.

Do not change your mind based on something you hear just before the election, unless you have gone to great effort to confirm it. Even then, be cautious.

People's characters and policies do not change at the last minute. False information does.

It matters not only who you vote for, but also why you vote that way.

You have a duty to get accurate information and consider it fairly.

You are not a good citizen if you take sides just for the sake of taking sides or keep yourself willfully ignorant of things you need to know.

The outcome of an election is a distribution of votes, not unanimous election of one candidate.

Accordingly, two people can both be doing their country a service while they vote differently and speak for different parts of the electorate.

Both supporting and opposing votes express messages that the winner needs to hear.

Vote.


2020 October 24 (Extra)

Life in wartime: The third wave is coming

Three non-astronomy topics today. Then the astronomical pictures will resume...

I made a cautious visit to the UGA Science Library today to return the books I checked out just before the beginning of fall semester. It was quite empty, with maybe half a dozen students in the whole place, so in spite of pandemic conditions I had no trouble using it. Except for one thing: reading was hard because my mask was fogging up my glasses. There is a different kind of mask that doesn't do that, but it's less durable and I don't usually carry it in my pocket. Anyhow, I was relieved to see that the library is still there and the university is still at least partly functioning.

I'm one of many who felt that today (Saturday, Oct. 24) was a good day to get out and run some errands. The weather was good, the infection rate (particularly locally) has dipped, and, crucially, we think it's going to go up again, leading to heavier restrictions (voluntary or compulsory) in the next couple of months (so let's get things done now).

(I don't advocate throwing caution to the winds, only catching up on things that would otherwise have to be done later.)

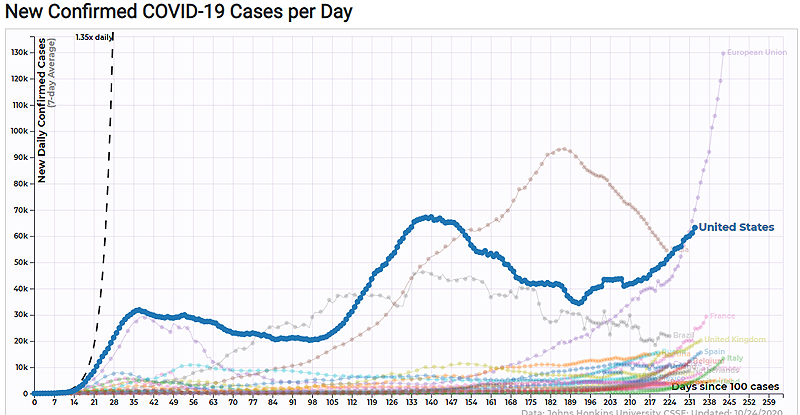

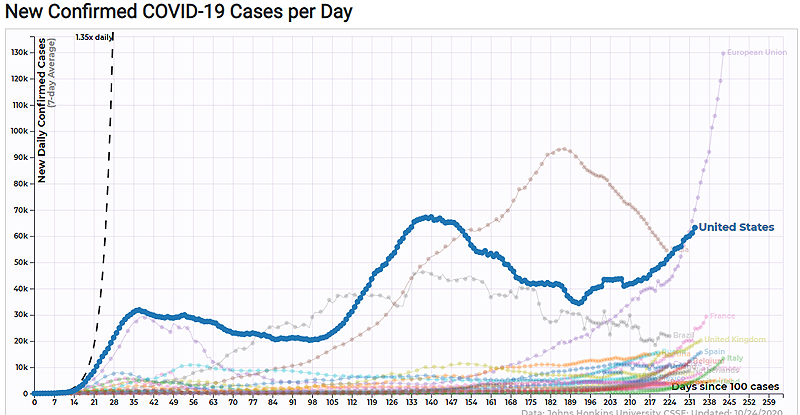

Here's the national graph from 91-DIVOC.com. The Georgia graph has much less of an upturn at the right, at least so far.

What is life like these days? Schools and universities vacillate between online and in-person classes, often almost day-to-day. Restaurants are highly restricted, and most people avoid them. Stores operate normally except that customers are required to wear masks.

I continue to work at home, as does all of FormFree.

Melody and I haven't been to an in-person church service since March; we follow them online every week.

I keep busy and am not bored at all. The main thing I miss is social interaction with people who do not immediately need anything from me.

I had a little of that two Wednesdays ago when about a dozen FormFree people met, cautiously, in the outdoor Biergarten of Creature Comforts Brewery. I have no taste for anything a brewery makes, but fortunately they also had gourmet ginger ale. In any case, social life during the pandemic is, quite properly, almost nil, and I probably won't go to anything similar for several weeks.

And now we're about to have an election. Which brings me to my next topic...


A wave of national foolishness

I want to say, bluntly, something lots of people have noticed and wondered how to comment on.

I'm saying it here and not on Facebook because I don't want a crowd coming at me with torches and pitchforks.

The root cause of America's poor handling of the pandemic is that, over the previous ten years or so, an increasing part of the public had fallen under the spell of political manipulators who indoctrinated them to ignore reliable sources of information.

"You can't believe the mainstream media," they said. "It's fake news."

Well, if you tell me the mainstream media are ignoring something, you may well be right. Point me to good sources of information about it. But if you tell me I must not even look at the mainstream media in order not to be contaminated by "lies," well, that is as silly as it looks.

This indoctrination then made it easy for manipulators to keep maybe 5% of the population from wearing masks or taking other precautions. They put out the word that if you wear a mask, you're knuckling under to tyranny. The trouble is, masks and other precautions work only if almost everyone uses them. A few percent of people promiscuously spreading the virus can easily overcome everyone else's precautions.

Some people wanted to deny the reality of the pandemic, and sometimes their odd ideas about the coronavirus reached the level, or almost the level, of psychotic delusion.

The key symptom of a delusion is that the deluded person can't give reasons for the odd belief, or at least, not reasons that make any sense. They sometimes scream, "Do your research!" while not sharing any research of their own. The strangest thing that has been yelled at me (online) was "Qualifications don't matter to me!" In other words, "You have to believe what I say and ignore the medical experts."

To anyone who wants a fair hearing for a non-mainstream opinion about the coronavirus (or anything else), I want to say two things:

- It doesn't matter whether your argument convinces me or any other non-expert. I want to know whether it convinces people who really know the subject (in this case, medicine).

- What is your goal? Are you trying to contribute to knowledgeable people's discussion, or are you trying to divide people and split off a group of angry dissenters?

The sooner you express contempt for knowledgeable, honest people, the sooner you convince me you're doing the second thing and I should have nothing to do with you.

Either I've isolated myself from most of the crackpots by now, or the amount of foolishness is diminishing. (The appearance of jokes about "Facebook School of Medicine" seems to have been a turning point.)

I think one reason coronavirus foolishness is diminishing is that by now, most people have known someone who became seriously ill; that makes it harder to deny.

But it is important for us to know what this wave of national foolishness is, or was.


AM radio may be going away

AM radio as we know it has been around for 100 years as of this coming November 2, but in the United States, it may soon start transitioning, gradually, to an all-digital format that ordinary AM radios cannot demodulate (though they will pick it up, as unintelligible noise).

Fifty years ago, AM had a big advantage over FM: cheaper and simpler equipment on both ends.

Both the radios and the radio stations were less expensive to build and easier to keep in good working order.

A second advantage is better usability of weak signals. With FM, either the signal is strong enough for good sound, or you hear a noisy mess. With AM, if you can put up with some static, you can get useful (though not high-quality) reception of very distant stations.

Try it at night in your car; you'll probably find WLW, WSB, and quite a few other clear-channel stations audible from many hundreds of miles away.

Before radio stations were so numerous and so effectively networked, this was important.

In some remote areas, it still is, especially in mountainous terrain, because mountains block FM but not AM, due to the widely different frequencies.
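The frequency difference is easiest to appreciate as a difference in wavelength (wavelength = speed of light ÷ frequency). Using the U.S. band edges, roughly 530 to 1700 kHz for AM and 88 to 108 MHz for FM, AM waves come out hundreds of meters long and FM waves about 3 meters. Roughly speaking, the long AM waves travel partly as a ground wave and bend around obstacles, while FM behaves more like light and needs something close to a line of sight; that is a simplification, not a full propagation model.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_m(freq_hz):
    """Free-space wavelength in meters for a frequency in hertz."""
    return SPEED_OF_LIGHT_M_PER_S / freq_hz


BAND_EDGES_HZ = {
    "AM, bottom of band (530 kHz)": 530e3,
    "AM, top of band (1700 kHz)": 1700e3,
    "FM, bottom of band (88 MHz)": 88e6,
    "FM, top of band (108 MHz)": 108e6,
}

for name, freq in BAND_EDGES_HZ.items():
    print(f"{name}: {wavelength_m(freq):7.1f} m")
```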

But the sound quality of AM was never as good as FM.

So in the 1970s, when silicon transistors and ICs gave us good low-cost FM radios to listen to, everyone who wanted to hear music flocked to FM.

Today, fifty years later, music on AM is rare. Instead we get political harangues.

Also, AM is subject to interference, and there are a lot more sources of interference now than in 1970 or even 2010. Computers, digital devices of all sorts, fluorescent lights, and line-operated LEDs make noise on an AM radio. They are less of a problem if you're listening to a strong local station, but they rule out listening to anything farther away.

As I understand it, digital AM will deliver FM-like sound quality and will be more resistant to interference, but it will require new-style ("HD radio") receivers.

Unlike with TV, the AM frequency band will not change, and the conversion to digital will not be compulsory. Clear-channel stations that serve remote areas will probably be the last to change over.

But I wonder if, in 50 years, the antique radios I'm saving for my grandchildren will have anything to listen to! Hobbyists may have to build little transmitters to deliver signals to them, rather the way antique-TV hobbyists now use the RF output of a DVD player.

See also this video.


2020 October 24

One of my best astrophotos ever

The most beautiful pictures result when I am trying out equipment, replicating what I've done before, rather than trying anything scientific.

So it is with the picture of the Andromeda Galaxy featured here.

Last Saturday (October 17) the weather, my circumstances, and the phase of the moon cooperated, and I was able to go to Deerlick. (Yes, the phase of the moon matters; moonlight interferes with astrophotography.) So I went, and so did a lot of other people. This was in fact the biggest crowd I had ever seen there outside of the Peach State Star Gaze, which was cancelled this year. I suppose this was the unofficial Peach State Star Gaze. Here's a small part of Grier's Field:

My mission was equipment testing. As you saw the other day, I have just calibrated the PEC of my AVX mount, and I'm trying out my new Askar 200-mm f/4 lens, specially designed for astrophotography.

I'm glad to report they both served me well.

Almost every exposure taken with the AVX was tracked perfectly, and the lens gave images that were sharp all the way to the corners. In fact the pictures that I took with this lens might be described as map-like; the stars are points, uniform all over the picture, and no lens peculiarities are visible.

Here, then, is a stack of twenty-one 2-minute exposures of M31, with the 200-mm lens at f/4 and a Nikon D5500 (H-alpha-modified) at ISO 400. No guiding corrections were needed.
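One way to see why unguided 2-minute exposures can work with this lens is that the image scale is coarse. Image scale in arc-seconds per pixel is 206,265 × pixel size ÷ focal length. The pixel size is an assumption here: the D5500's APS-C sensor is about 23.5 mm wide with 6000 pixels across, giving about 3.9 µm. The 10-arc-second figure is likewise an assumption, taken from the peak-to-peak correction reported in the periodic-error notes on this page.

```python
ARCSEC_PER_RADIAN = 206_265


def plate_scale_arcsec_per_px(pixel_um, focal_mm):
    """Angle of sky covered by one pixel: 206265 * pixel size / focal length."""
    return ARCSEC_PER_RADIAN * (pixel_um / 1000.0) / focal_mm


# Assumption: sensor about 23.5 mm wide with 6000 pixels across.
pixel_um = 23.5 / 6000 * 1000
scale = plate_scale_arcsec_per_px(pixel_um, 200)
print(f"{pixel_um:.2f} um pixels at 200 mm: {scale:.1f} arcsec per pixel")

# Assumption: about 10 arcsec peak-to-peak of periodic error before correction.
print(f"10 arcsec of tracking error spans about {10 / scale:.1f} pixels")
```

At about 4 arc-seconds per pixel, even the full uncorrected periodic error spans only a couple of pixels, and PEC is meant to remove most of that.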

Click to see a larger version, then click again to see it magnified.

All astro images copyright 2020 Michael A. Covington.

The galaxy was easy to see with the unaided eye at Deerlick, and it gave me pause to think that the light reaching my eyes had been traveling for about two and a half million years.


2020 October 21

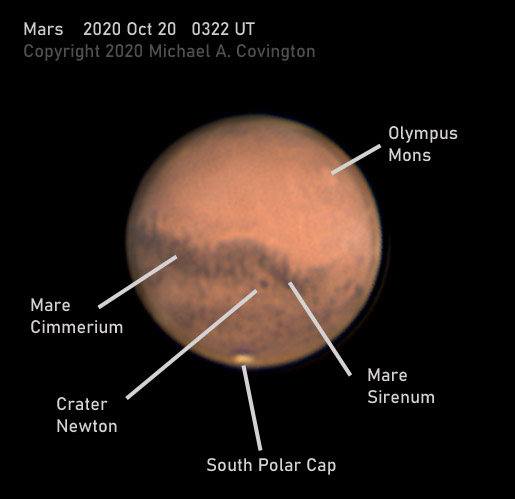

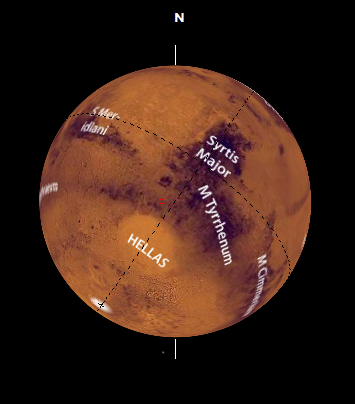

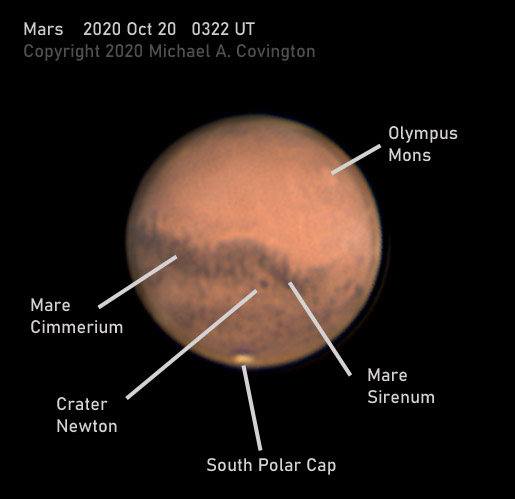

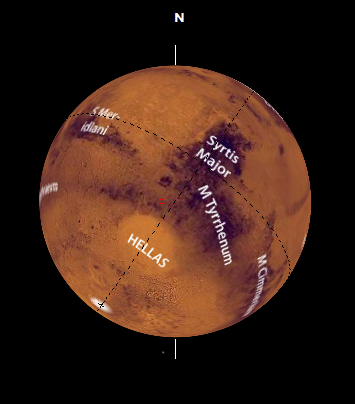

Mars, showing a crater (or maybe quite a few)

A veritable army of amateur astronomers continues taking some of the best earth-based pictures of Mars ever taken. And the maps available to us have not kept up. There are detailed topographic maps of mountains, canyons, and craters, but not very good albedo (brightness) maps, and albedo is what we see from earth.

The light and dark areas actually shift a little from year to year, since they are formed by Martian sand blowing around in the wind, influenced but not tightly controlled by the topography. Most maps show a light area between Mare Sirenum and Mare Cimmerium; this year's photographs do not.

The dark spots in the best pictures correspond roughly but not exactly to craters. In particular, the huge crater Newton, at the end of Mare Sirenum, is a reliable dark spot; it stays full of darker-than-usual material. You can see it in my labeled picture.

Of course, earth-based observers cannot tell that these spots are craters; they are just spots.

See also what I wrote two years ago.

This was taken from my driveway with an 8-inch Celestron EdgeHD telescope, 3× focal extender, and ASI120MC-S camera. I recorded 16,697 frames of video (which took 5 minutes), then used software to select the best 20% and stack and sharpen them.
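The frame-selection step can be sketched as follows. At 16,697 frames in 5 minutes the camera was running at roughly 56 frames per second, and the best 20% is about 3,339 frames. The sketch assumes the frames are already aligned and scores sharpness by the variance of a discrete Laplacian, a simple and common focus measure; the post doesn't say what quality measure its software uses, and dedicated stacking programs typically grade and align many small patches separately rather than whole frames. The function names are hypothetical.

```python
def laplacian_variance(img):
    """Sharpness score for one frame (a list of rows of pixel values).

    The discrete Laplacian responds to pixel-to-pixel contrast; a sharp frame
    gives large positive and negative values, so their variance is high.
    """
    values = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            values.append(img[y - 1][x] + img[y + 1][x] + img[y][x - 1]
                          + img[y][x + 1] - 4 * img[y][x])
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def stack_best(frames, keep_fraction=0.2):
    """Rank aligned frames by sharpness, keep the best fraction, and average them."""
    ranked = sorted(frames, key=laplacian_variance, reverse=True)
    n_keep = max(1, round(len(frames) * keep_fraction))
    best = ranked[:n_keep]
    height, width = len(best[0]), len(best[0][0])
    return [[sum(f[y][x] for f in best) / n_keep for x in range(width)]
            for y in range(height)]
```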

The doubling effect around the edge is not, as I used to think, due to overshoot in a sharpening algorithm. It is diffraction in the telescope, just like the rings around a star seen at high power, and I have done only a small amount of processing to weaken it, since it is optically genuine and I don't want to discard detail.


2020 October 17

NGC 7331 and Stephan's Quintet through murk

On the evening of October 15, our skies were not particularly clear, but I needed to test how well my AVX was tracking the stars (see below), so I took this picture of the galaxy NGC 7331 and, below it in the picture, the distant galaxy group Stephan's Quintet.

Not only were the atmospheric conditions poor, but I was also using an unusually small instrument. You are looking at the enlarged central portion of a picture taken with a Sharpstar Askar 200-mm f/4 telephoto lens (2-inch aperture) and a Nikon D5500 camera.

Both the lens and my Celestron AVX mount performed well. I took twenty-three 2-minute exposures and only had to discard three of them because of poor tracking.


Some notes on periodic-error training

To get a telescope to track the stars perfectly even though it has imperfect gears, we use either (ideally) a guidescope and autoguider, with a camera that constantly watches a star and tells the mount how to move to re-center it, or (for convenience) periodic-error correction.

That is, the microprocessor in the telescope mount memorizes the irregularities in the gears and plays back appropriate corrections every time the worm (the small gear that drives the main gear) goes around.

The memorized corrections have to come from somewhere, of course. That's why I use an autoguider to train the periodic-error correction (PEC) even though I usually don't use it when taking pictures.

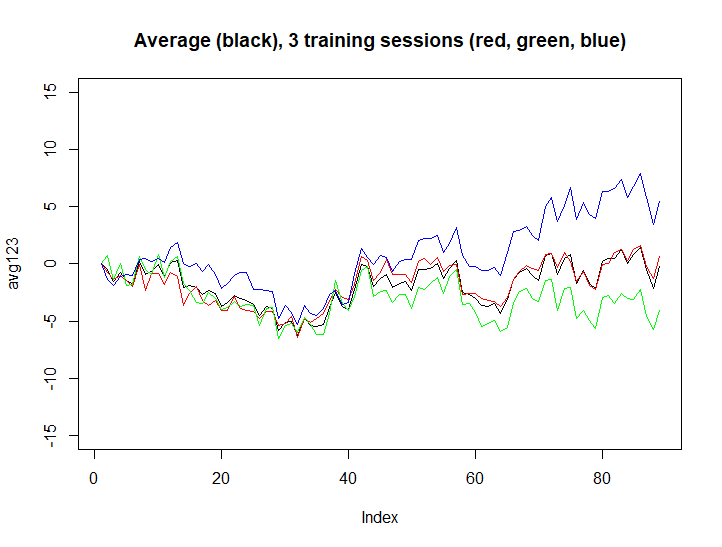

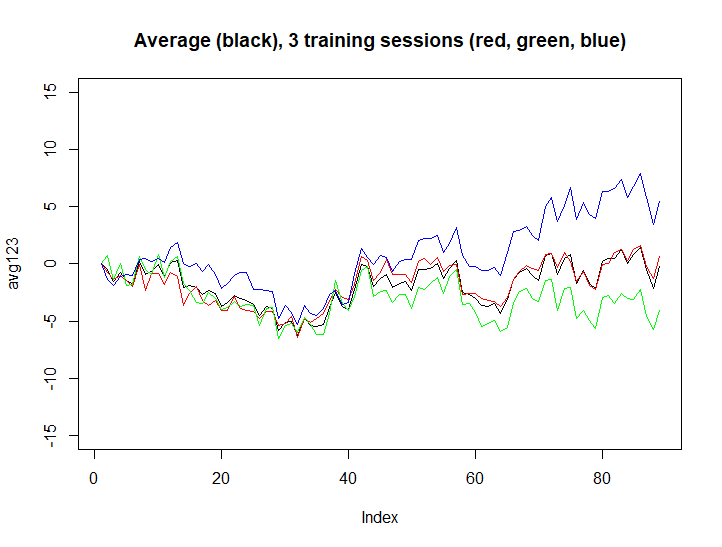

The other night, I re-trained the PEC of my Celestron AVX. Using Celestron's PECtool software, I was able to train it three times, download the results from each, average them, and upload the result to the mount. PECtool produces CSV files that I can analyze and plot in R. Here's a handy graph:

The vertical axis reads in arc-seconds. The horizontal axis uses an arbitrary scale where 0 to 88 spans one rotation of the worm, taking 10 minutes of sidereal time (about 2 seconds short of 10 ordinary minutes).

If the black curve doesn't look like the mean of the other three, it's because the drift was removed from all of the curves before averaging them. That is, any overall tilt was removed so that each curve would start and end at height 0.
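The drift removal and averaging described above were done in R; here is the same idea sketched in Python. PECtool's CSV layout isn't described here, so the code simply assumes each training run has already been read into a list of corrections in arc-seconds, one per index across one full rotation (first and last points one rotation apart). Drift is removed by subtracting the straight line joining each run's first and last points, so every run starts and ends at 0; the runs are then averaged point by point with no smoothing, so repeatable small jerks survive. The helper `index_to_solar_seconds` checks the time scale: 10 sidereal minutes is about 598.4 ordinary seconds, roughly 1.6 seconds short of 10 minutes. All names are hypothetical.

```python
SOLAR_SECONDS_PER_SIDEREAL_SECOND = 0.9972696
INDICES_PER_ROTATION = 88          # arbitrary scale: 0 to 88 is one rotation
ROTATION_SIDEREAL_SECONDS = 600.0  # one rotation takes 10 sidereal minutes


def index_to_solar_seconds(index):
    """Convert a position on the 0-88 scale to ordinary (solar) seconds."""
    sidereal = index * ROTATION_SIDEREAL_SECONDS / INDICES_PER_ROTATION
    return sidereal * SOLAR_SECONDS_PER_SIDEREAL_SECOND


def remove_drift(curve):
    """Subtract the straight line through the first and last points,
    so the curve starts and ends at 0 (removes the overall tilt)."""
    n = len(curve) - 1
    first, last = curve[0], curve[-1]
    return [y - (first + (last - first) * i / n) for i, y in enumerate(curve)]


def average_runs(runs):
    """Point-by-point mean of drift-removed training runs.

    Deliberately no smoothing: a small, sharp irregularity that repeats in
    every run survives the averaging and gets corrected.
    """
    detrended = [remove_drift(run) for run in runs]
    length = len(detrended[0])
    if any(len(run) != length for run in detrended):
        raise ValueError("all runs must have the same number of points")
    return [sum(run[i] for run in detrended) / len(detrended) for i in range(length)]
```

A smoothing step (a moving average, say) would be easy to add, but it would blur exactly the small, reproducible jerks that are worth correcting.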

Here are some things to notice:

- The total amount of correction needed is small, on the order of 10 arc-seconds from highest to lowest point. This is a Celestron AVX mount with a roller-bearing upgrade to the RA axis, and it performs well.

- Many small jerks in tracking (up and down) are reproducible. This is why PE correction curves should be averaged but not smoothed. If a small, sharp irregularity is predictable, it should be corrected.

- Non-reproducible drift (as in the green curve) is common and is on the order of 1 arc-second per minute of time. This is due to flexure in the mount or the apparatus mounted on it.

Only Celestron offers PECtool. But for mounts of all types there's something even better, PEMpro. I was using PECtool because I wanted to compare with earlier PECtool data, and with limited time available, I didn't want to risk misunderstanding something. But I highly recommend PEMpro for analysis and correction of periodic error.

There's another way. Some of the best mounts have high-resolution encoders that constantly measure the rate of rotation very accurately and correct irregularities on the fly. The less expensive ones are prone to SDE ("sub-divisional error"), which manifests as a slow oscillation at a low amplitude; better ones have overcome that problem. But even if periodic error is completely eliminated, in many astrophotographic situations autoguiding will still be necessary because of atmospheric refraction, polar alignment inaccuracy, and unavoidable flexure in the instruments.


2020 October 13

Mars again

Last night I took this image of Mars using a technique that I think will be reproducible; that is, I've settled on a technique and no longer need to experiment so much.

Using the C8 EdgeHD telescope (8-inch f/10) with a 3× focal extender and an ASI120MC-S camera, I recorded 5 minutes of video, comprising about 12,000 frames, and aligned and stacked the best 50% of the frames. Theoretically it would be better to stack the best 20%, but I find that by using 50%, I get a smooth, grainless image, and no sharpness is lost.
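The tradeoff between keeping 50% and 20% of the frames is mostly about noise. If the noise in each frame is random and independent (an assumption), averaging n frames reduces it by a factor of √n, so for about 12,000 frames the comparison works out as follows. This ignores the fact that the extra frames are slightly less sharp, which is exactly the tradeoff being judged by eye.

```python
import math


def relative_noise(n_frames):
    """Random noise left in an average of n frames, relative to one frame."""
    return 1 / math.sqrt(n_frames)


total = 12_000
best_half = total // 2   # keep the best 50%
best_fifth = total // 5  # keep the best 20%
ratio = relative_noise(best_fifth) / relative_noise(best_half)
print(f"{best_half} frames vs {best_fifth} frames: "
      f"the smaller stack has about {ratio:.2f} times as much noise")
```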

I'm now in a position to get good images of Mars but am not actually studying Mars: I'm not tracking the clouds (such as there are) or the effect of wind on the gradually changing surface features.

One thing I did do is view Mars at 400 power, and it was surprising how much I could see, although I didn't see as much detail as is visible in the picture. In my earlier years I developed an aversion to using high magnification, and in fact my usual power with this telescope has been 110× for all types of objects, occasionally going to 200× for double stars and planets.

I think 200× may have actually been the worst of both worlds, too high to be sharp but too low to be really big. I viewed double stars at 400× and adjusted the collimation before looking at Mars.

One factor might be better optics. Thirty years ago, neither telescopes nor eyepieces were as good; I formed my opinion using conventional Schmidt-Cassegrains and eyepieces where the high-power ones had very short eye relief. My new eyepiece is an Astro-Tech Paradigm, 5 mm, with about 15 mm of eye relief, very comfortable to look through.
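The magnifications follow from the telescope's focal length divided by the eyepiece's: an 8-inch f/10 has a focal length of about 2032 mm, so the 5-mm eyepiece gives about 406×. The exit pupil (aperture ÷ magnification) then shrinks to about half a millimeter, which is about where the common rule of thumb of 50× per inch of aperture puts the useful maximum. The 18.5-mm and 10-mm eyepieces in the loop are hypothetical, chosen only to reproduce the 110× and 200× mentioned above.

```python
def magnification(scope_focal_mm, eyepiece_focal_mm):
    """Telescope focal length divided by eyepiece focal length."""
    return scope_focal_mm / eyepiece_focal_mm


def exit_pupil_mm(aperture_mm, power):
    """Diameter of the beam leaving the eyepiece: aperture divided by magnification."""
    return aperture_mm / power


C8_FOCAL_MM = 2032     # 8-inch aperture at f/10
C8_APERTURE_MM = 203

# 18.5 mm and 10 mm are hypothetical; 5 mm is the eyepiece described above.
for eyepiece_mm in (18.5, 10.0, 5.0):
    power = magnification(C8_FOCAL_MM, eyepiece_mm)
    print(f"{eyepiece_mm:4.1f} mm eyepiece: {power:4.0f}x, "
          f"exit pupil {exit_pupil_mm(C8_APERTURE_MM, power):.2f} mm")
```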

Other upgrades are in progress.

The AVX mount has recently acquired an iOptron iPolar finder, which makes polar alignment very quick if I bring along a computer. (If I don't, I can still align it the old way.)

The C8 EdgeHD is going to get a bigger finderscope as soon as the bracket to hold it arrives.


2020 October 11

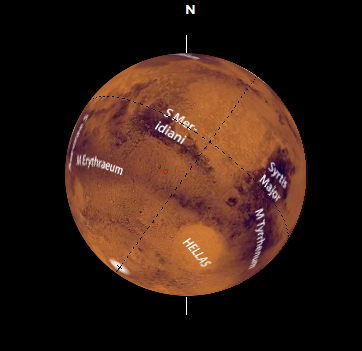

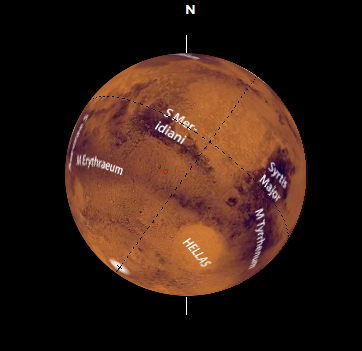

More Mars

A small army of amateur astronomers now have the ability to take better pictures of Mars than anyone on earth could do fifty years ago. In the following pictures, many of the spots correspond to craters. There are of course no "canals"; those were an optical illusion to which not all astronomers were susceptible.

The first of these is a derotated stack of three pictures, each of which was a stack of several thousand video frames. The second one is from a large number of video frames stacked directly. (No derotation is needed for as much as a 5-minute exposure of Mars because the planet doesn't rotate that fast.) And the third one is of course a map generated with WinJUPOS.
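A quick check on the claim about Mars: a feature at the center of the disk moves fastest across our line of sight, at radius × rotation rate. The figures are assumptions for illustration: Mars about 22 arc-seconds across near its October 2020 closest approach, a rotation period of about 24.6 hours, and the Dawes limit of an 8-inch (203-mm) aperture, 116 ÷ 203 ≈ 0.57 arc-second, as a yardstick for resolution.

```python
import math


def smear_arcsec(diameter_arcsec, period_hours, minutes):
    """Distance a feature at the center of the disk moves during an exposure.

    At disk center the surface moves straight across our line of sight at
    (radius x angular rotation rate), its fastest apparent speed.
    """
    radius = diameter_arcsec / 2
    radians_per_second = 2 * math.pi / (period_hours * 3600)
    return radius * radians_per_second * minutes * 60


DAWES_LIMIT_8IN = 116 / 203   # about 0.57 arcsec for a 203-mm aperture
smear = smear_arcsec(22, 24.6, 5)
print(f"Mars smear in 5 minutes: {smear:.2f} arcsec "
      f"({smear / DAWES_LIMIT_8IN:.0%} of the Dawes limit)")
```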

Particularly in the first picture, you can see the "rind" at the left edge, a bright band with a dark band next to it.

This is a diffraction effect, and I have chosen not to try very hard to get rid of it.

To be precise, the bright band is real, though thinner than it looks; it is the sharp sunlit edge of the planet. The dark band is caused by diffraction; it corresponds to the gap between the center of a star image and the first diffraction ring.

After the dark band comes another bright band, which is less noticeable and mostly makes the dark band look darker by contrast.
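For scale, here are the angular radii of the first few features of a star's diffraction pattern (the Airy pattern) for an 8-inch aperture, assuming green light at 550 nm. The multiples of wavelength ÷ aperture are the standard values for an unobstructed circular aperture; a central obstruction like the C8's moves some light into the rings and shifts them slightly, and the edge of a planet is not a point source, so treat these only as the order of magnitude of the dark and bright bands.

```python
ARCSEC_PER_RADIAN = 206_265

# Angular radius of each feature of the Airy pattern, in units of
# wavelength / aperture, for an unobstructed circular aperture.
AIRY_MULTIPLES = {
    "first dark ring": 1.220,
    "first bright ring": 1.635,
    "second dark ring": 2.233,
}


def airy_radius_arcsec(multiple, wavelength_nm, aperture_mm):
    """Angular radius in arc-seconds of an Airy-pattern feature."""
    radians = multiple * (wavelength_nm * 1e-9) / (aperture_mm * 1e-3)
    return radians * ARCSEC_PER_RADIAN


for feature, k in AIRY_MULTIPLES.items():
    print(f"{feature}: {airy_radius_arcsec(k, 550, 203):.2f} arcsec from center")
```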

An article by Martin Lewis in this month's Journal of the B.A.A. shows convincingly that this effect is indeed diffraction, not digital processing, and that although digital sharpening brings it out, it is already present before sharpening is done.

More about that here.


Two object-oriented-programming topics

I've spent much of the past two weeks reorganizing a large C# program that was originally written as a series of experiments, which means its design was not consistent; I changed horses in the middle of several streams, and there was a fair bit of unused code, not to mention code that was badly organized.

That, plus quickly reading The Pragmatic Programmer, got me thinking about a couple of points about object-oriented design.

Inheritance vs. Interface vs. Wrapper

If you are thinking of defining a class that inherits another class, be cautious. Inheritance is no longer quite the glamorous new thing that it used to be. The designers of C# had already backed away from it somewhat by leaving out multiple inheritance. The Pragmatic Programmer warns against the "inheritance tax."

The disadvantage of inheritance is that if A inherits B, then A contains everything that is in B, including things added to B by other programmers later on, and any change to B is a change to A.

If A and B are merely supposed to be similar or analogous, and their internal workings are different, consider defining an interface instead, and having both A and B implement it. Consider this especially if you find that in A, you are writing replacements (overrides) for things inherited from B.

Another alternative is to have A contain an object of class B; that is, A is a wrapper around B. That is a good way to create a B with a little more information added.

The situation in which I most often use inheritance is to create a class that slightly extends a built-in class. For example, I might have a type that inherits List and adds a field saying where the list came from, or whether it has been validated.

This could also be a wrapper, but direct inheritance can make the derived class easier to use.
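The three designs can be sketched side by side. The examples are in Python rather than C#, and every class name is hypothetical; in C#, the first would be a class deriving from `List<T>`, the second an `interface` with two unrelated implementing classes, and the third a class that holds a private `List<T>` field. Python's `typing.Protocol` describes the interface structurally rather than by declaration, which is a real difference from C#.

```python
from __future__ import annotations

from typing import Optional, Protocol


# 1. Inheritance: a built-in list with a little more information attached.
#    Everything a list can do (and anything added to list later) comes along.
class SourcedList(list):
    def __init__(self, items=(), source="unknown"):
        super().__init__(items)
        self.source = source      # where the list came from
        self.validated = False    # whether it has been checked yet


# 2. Interface: classes that are merely analogous share a contract,
#    not an implementation, so neither inherits the other's internals.
class Catalog(Protocol):
    def lookup(self, name: str) -> Optional[str]: ...


class DictCatalog:
    def __init__(self, entries: dict):
        self._entries = entries

    def lookup(self, name: str) -> Optional[str]:
        return self._entries.get(name)


class PrefixCatalog:
    def __init__(self, names: list):
        self._names = names

    def lookup(self, name: str) -> Optional[str]:
        return next((n for n in self._names if n.startswith(name)), None)


def describe(catalog: Catalog, name: str) -> str:
    return catalog.lookup(name) or "not found"


# 3. Wrapper: contains a list instead of being one, and exposes only
#    the operations it chooses to.
class CheckedNames:
    def __init__(self, names):
        self._names = list(names)
        self.validated = all(isinstance(n, str) for n in self._names)

    def __len__(self):
        return len(self._names)

    def __iter__(self):
        return iter(self._names)
```

Note that `SourcedList` silently gains every method of `list`, including ones that could make `validated` stale, while `CheckedNames` has no `append` at all; that is the inheritance tax in miniature.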

Constructor vs. Factory Method

On the question of constructors versus factory methods (static methods that create an object), here are the considerations:

Parameterless constructor

- Is supplied automatically if you don't define any constructors at all

- Can be marked private to prevent calling it from elsewhere

- Creates the object in its empty or initialized form

Constructor with parameters

- Recommended when the parameters are data that goes into the object

- Must create the object; cannot return null (it can fail only by throwing an exception)

- Cannot perform any computation before calling the base (parameterless) constructor

Factory method

- Recommended when parameters are extraneous to the object, such as a file name to read the object from

- Can return null if the object is not successfully created

- Can return a subclass of the class (e.g., create an object of a different subclass depending on what is discovered while creating it)

I had had the dim idea (from 1990s object-oriented programming) that factory methods were considered less desirable. No; in many situations they are just what is needed.
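Here is the factory-method pattern in the same hypothetical terms, again in Python. Python supplies no implicit parameterless constructor, so only the last two groups of considerations carry over, but the contrast is the same: `__init__` takes the data that go into the object, while the static factory takes a line of text, returns `None` if it can't parse it, and chooses between the base class and a subclass depending on what it finds. The class names and the line format are invented for the example.

```python
from __future__ import annotations

from typing import Optional


class Observation:
    def __init__(self, target: str, exposure_s: float):
        # Constructor: the parameters are the data that go into the object.
        self.target = target
        self.exposure_s = exposure_s

    @staticmethod
    def from_line(line: str) -> Optional[Observation]:
        """Factory method. Its parameter (a line of text) is not itself part of
        the object; it returns None on failure and decides which class to build."""
        parts = [p.strip() for p in line.split(",")]
        if len(parts) not in (2, 3) or not parts[0]:
            return None
        try:
            exposure = float(parts[1])
            frames = int(parts[2]) if len(parts) == 3 else None
        except ValueError:
            return None
        if frames is None:
            return Observation(parts[0], exposure)
        return VideoObservation(parts[0], exposure, frames)


class VideoObservation(Observation):
    def __init__(self, target: str, exposure_s: float, frames: int):
        super().__init__(target, exposure_s)
        self.frames = frames
```

For example, `Observation.from_line("Mars, 300, 16697")` builds a `VideoObservation`, while `Observation.from_line("garbage")` returns `None` instead of raising.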

Bear in mind that although I do a titanic amount of computer programming, the main thrust of my work is not finding the most elegant way to express simple algorithms. It is finding ways to do unusual things. Elegance often suffers.


2020 October 4 (Extra)

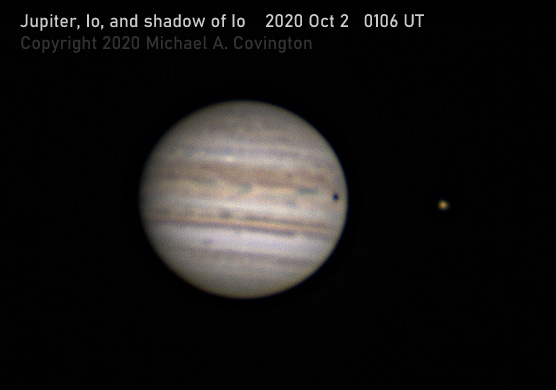

Jupiter, derotated

As you know, I photograph planets by recording video through my telescope and then aligning and stacking the video frames. Stacking many frames removes most of the random noise from the digital sensor. It also turns random atmospheric blurring into something close to a Gaussian blur, which can be largely undone by computation. (The sum of many independent random variables tends toward a Gaussian distribution; that's where bell curves come from.) In the process I can discard frames that aren't very sharp; in fact, I usually keep only the sharpest 50%, or if there are plenty of frames, the sharpest 25%.
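The central-limit point can be checked numerically. Excess kurtosis is one measure of how far a distribution is from a bell curve: 0 for a Gaussian, −1.2 for a uniform distribution, and −1.2 ÷ n for a sum of n independent uniform variables. Real atmospheric seeing is not literally a sum of uniform variables; the simulation only shows how quickly sums of independent disturbances become Gaussian.

```python
import random
import statistics


def excess_kurtosis(xs):
    """0 for a Gaussian distribution; -1.2 for a uniform one."""
    m = statistics.fmean(xs)
    var = statistics.fmean([(x - m) ** 2 for x in xs])
    m4 = statistics.fmean([(x - m) ** 4 for x in xs])
    return m4 / var ** 2 - 3


def sums_of_uniforms(n_terms, n_samples, rng):
    """n_samples draws, each the sum of n_terms independent uniform(-1, 1) values."""
    return [sum(rng.uniform(-1, 1) for _ in range(n_terms)) for _ in range(n_samples)]


rng = random.Random(1)
for n in (1, 2, 4, 12):
    k = excess_kurtosis(sums_of_uniforms(n, 20_000, rng))
    print(f"sum of {n:2d} uniform values: excess kurtosis {k:+.2f} "
          f"(theory {-1.2 / n:+.2f})")
```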

One thing that limits me is that Jupiter rotates so fast that I can't record more than about two minutes of video without smearing the fine detail.

At least, the theoretical limit is 1 to 2 minutes, depending on how much blur is tolerable.
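The 1-to-2-minute figure can be reproduced from the rotation rate. The numbers are assumptions for illustration: Jupiter about 40 arc-seconds across in autumn 2020 and a rotation period of about 9.93 hours. The allowed blur values of 0.2 and 0.4 arc-second are arbitrary fractions of the 8-inch's resolution.

```python
import math


def max_exposure_minutes(diameter_arcsec, period_hours, allowed_blur_arcsec):
    """Longest exposure before a feature at the center of the disk
    moves by the allowed amount of blur."""
    speed = (diameter_arcsec / 2) * 2 * math.pi / (period_hours * 60)  # arcsec per minute
    return allowed_blur_arcsec / speed


for blur in (0.2, 0.4):
    minutes = max_exposure_minutes(40, 9.93, blur)
    print(f"allowing {blur} arcsec of smear: about {minutes:.1f} minutes")
```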

In practice, we do better than that, because the process of stacking the frames makes some compensation for rotation. It's not perfect, but insofar as features shift about the same distance in the same direction, stacking can bring them together again. I've never heard anyone mention this, but it's why we often get surprisingly good results with videos that are theoretically too long. It only works in the middle of the face of the planet, of course.

But there's a better way. The software package WinJUPOS actually calculates the rotation of the planet, separates the video frames, de-rotates each one by transforming the image, and puts them together again.
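To show why derotation can't be done by simply shifting whole frames, here is a one-dimensional toy version: a feature on the equator at longitude λ from the central meridian appears at x = R sin λ, so undoing rotation means shifting λ, not x. WinJUPOS handles the full geometry of the planet; this sketch assumes a spherical planet with its axis square to our line of sight and ignores everything else. The function name and the Jupiter-like numbers are hypothetical.

```python
import math


def derotate_x(x, radius, period_hours, dt_minutes):
    """Where a feature seen at horizontal offset x (from the center of the disk)
    would have appeared dt_minutes earlier.

    Toy model: feature on the equator, spherical planet, axis square to our
    line of sight, no phase. Longitude from the central meridian is
    asin(x / radius); rotation changes longitude, not x, at a constant rate.
    """
    longitude = math.asin(x / radius)
    longitude -= 2 * math.pi * dt_minutes / (period_hours * 60)
    return radius * math.sin(longitude)


# Jupiter-like numbers (assumed): 20-arcsec radius, 9.93-hour rotation, 10 minutes.
for x in (0.0, 10.0, 18.0):
    shift = x - derotate_x(x, 20, 9.93, 10)
    print(f"feature at x = {x:4.1f} arcsec shifts back by {shift:.2f} arcsec")
```

A feature at the center of the disk moves about twice as far in 10 minutes as one near the limb, so a single shift can line up the middle of the face but not the edges.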

The following are my brief notes on how to do this. There are better tutorials elsewhere, but here I hope to put the whole process in context.

How much did it help? It enabled me to take the best 25% from over 18,000 video frames and get the smooth, sharp image that you see at the top. Without this kind of high-tech help, I was already getting quite good images. But I don't mind getting results that are even better!

Taking a long video and derotating was equivalent to doubling the size of my telescope.


2020 October 4

Busy times and a plethora of astrophotography

I'm back... All of a sudden, instead of incessant cloudy weather, we've had a succession of clear nights, and I did astrophotography on four consecutive nights (all of it lunar and planetary work, from home). Scroll down to see the fruit of my labors.

And tomorrow (Oct. 5), Melody will get a birthday present: cataract surgery on her other eye. She will then have better vision than she's had for years if not decades. We joke that each of us got a new lens for our birthday, mine for my camera and hers for her eye.

For future readers who want to know what life was like in the plague year, I note that the coronavirus epidemic is continuing, and much of the country is having a third wave of it, but in Georgia the infection rate has fallen off nicely.

We still limit trips out of the house and wear masks when out in public. Now that most people know someone who has been severely ill or died of coronavirus, we're not encountering so many rabid anti-maskers.

For no good reason, anti-mask sentiment and disregard of the hazard have become associated with conservative politics, and Republicans proudly doff their masks at party gatherings.

And now President Trump is in the hospital with coronavirus. That is proof that choosing not to believe in the hazard does not protect you from it.

More generally, you can't make problems go away by choosing not to believe in them.


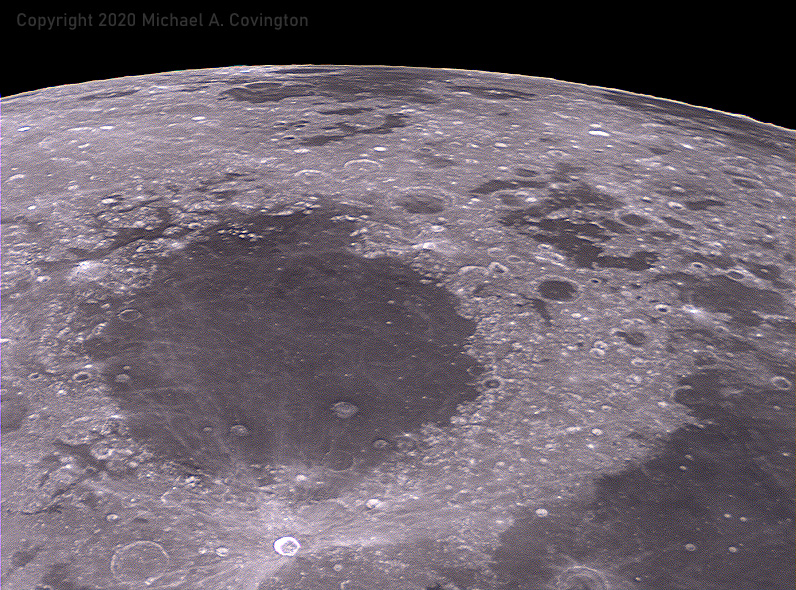

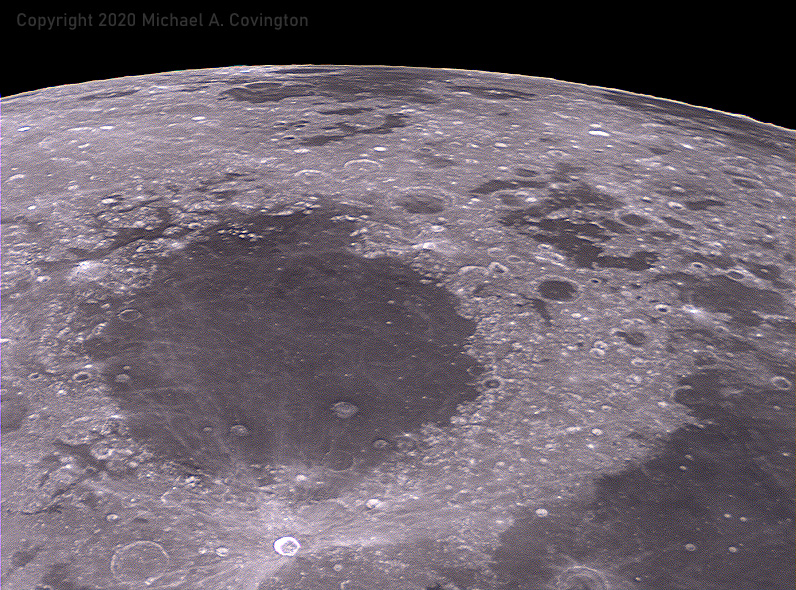

Beyond Mare Crisium

As you know, I've made a minor hobby of photographing the region of Mare Orientale on the moon, which is only visible when the non-circularity of the moon's orbit tips the region slightly toward us; it's on the edge of what we can see.

Right now the moon is tipped the other way, and here's what's on the other edge:

The large round dark area is of course Mare Crisium, the "eye" of the face in the moon, easy to see with the unaided eye and conspicuous any time the moon is in the evening sky. Beyond it is the appropriately named Mare Marginis ("sea of the edge").

8-inch telescope, ASI120MC-S camera. Like all the lunar and planetary images here, this is a stack of thousands of video frames, sharpened by wavelet processing.
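In its simplest form, wavelet sharpening splits an image into layers of detail at different scales, then reassembles it with some layers amplified. The sketch works on a one-dimensional brightness profile using the "à trous" method with the common B3-spline kernel; programs that offer wavelet sharpening work in two dimensions with their own filters and settings, and the post doesn't say which one was used here. With every gain set to 1 the original is reproduced exactly; a gain above 1 on the finest layer exaggerates small-scale contrast, and at a sharp edge it produces a bright-and-dark overshoot. Function names and gains are hypothetical.

```python
def smooth(signal, step):
    """One 'a trous' smoothing pass: B3-spline kernel [1, 4, 6, 4, 1] / 16
    with gaps of `step` samples between taps. Edges are handled by clamping."""
    kernel = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in zip(range(-2, 3), kernel):
            j = min(max(i + k * step, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def wavelet_sharpen(signal, gains):
    """Split the signal into detail layers at scales 1, 2, 4, ... plus a smooth
    residual, then rebuild it with each detail layer multiplied by its gain."""
    current = list(signal)
    layers = []
    step = 1
    for _ in gains:
        smoother = smooth(current, step)
        layers.append([a - b for a, b in zip(current, smoother)])
        current = smoother
        step *= 2
    result = current
    for layer, gain in zip(layers, gains):
        result = [r + gain * d for r, d in zip(result, layer)]
    return result
```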


Mars

Mars is now unusually close to the earth and looks like a bright reddish star high in the sky late at night. (It doesn't look like the moon; it never looks like the moon. A press release in 2003 said Mars would look as big in a 75-power telescope as the moon without a telescope, and someone copied it leaving out the telescope!)
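The 2003 press release's arithmetic does hold up, with the telescope included. The inputs are approximate: Mars was about 25 arc-seconds across at its closest in August 2003, and the full moon is about 31 arc-minutes across.

```python
MARS_2003_ARCSEC = 25.1   # Mars's apparent diameter at closest approach, August 2003 (approximate)
MOON_ARCMIN = 31.0        # the full moon's typical apparent diameter (approximate)
POWER = 75

# Magnification multiplies the apparent angle; divide by 60 to convert to arc-minutes.
mars_at_75x_arcmin = MARS_2003_ARCSEC * POWER / 60
print(f"Mars at {POWER}x: {mars_at_75x_arcmin:.1f} arcmin; "
      f"moon to the unaided eye: {MOON_ARCMIN:.0f} arcmin")
```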

Because the rotation period of Mars is about 24 hours (more precisely, about 24 hours 37 minutes), we see nearly the same side of it if we observe it at the same time of night on consecutive days. So here are a couple of pictures and a map. The map is generated with WinJUPOS software and a file I made containing a labeled map instead of the usual unlabeled one (more about that here).
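How close to "the same side" is it? Because a Martian rotation takes about 24 h 37 m, Mars completes only about 97.5% of a turn per Earth day, so at the same clock time each night the central meridian lags by roughly 9 degrees of Martian longitude, and the full cycle takes around 40 days. The sketch ignores the smaller changes caused by the shifting Earth-Mars geometry.

```python
SOLAR_DAY_H = 24.0
MARS_ROTATION_H = 24.623   # sidereal rotation period of Mars, about 24 h 37 m


def meridian_lag_deg_per_day():
    """Degrees of Martian longitude by which the central meridian falls behind
    when Mars is observed at the same clock time one day later
    (ignores the changing Earth-Mars geometry)."""
    turns_per_day = SOLAR_DAY_H / MARS_ROTATION_H
    return (1 - turns_per_day) * 360


lag = meridian_lag_deg_per_day()
print(f"about {lag:.1f} degrees per day; a full cycle takes about {360 / lag:.0f} days")
```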

8-inch telescope (Celestron C8 EdgeHD), 3× focal extender, ASI120MC-S camera.

Stack of thousands of video frames, wavelet-sharpened.

The exciting thing about Mars is that it's the only planet on which we can easily see surface detail. Venus, Jupiter, Saturn, Uranus, and Neptune are shrouded in thick atmospheres.

Mercury is small and hard to see from earth; the dwarf planets are even harder to see.

The surface features of Mars do change from year to year as dust and sand are blown around by the wind.

Mismatch between the map and the photographs does not mean the map was wrong when it was made.

In the past, writing about the history of amateur astronomy, I noted that from about 1900 to the beginning of the space program, lunar and planetary work was in amateur hands; professionals were focused on spectroscopy and astrophysics. In Planets and Perception, William Sheehan points out one reason why this was so. For decades, in the popular and even professional mind, planetary astronomy was associated with Percival Lowell's speculations about canals and Martians. It was almost like a UFO cult.

Suddenly the space program turned planetary exploration back into serious science.

In later years we've seen a merging of planetary science with geology — which makes sense.


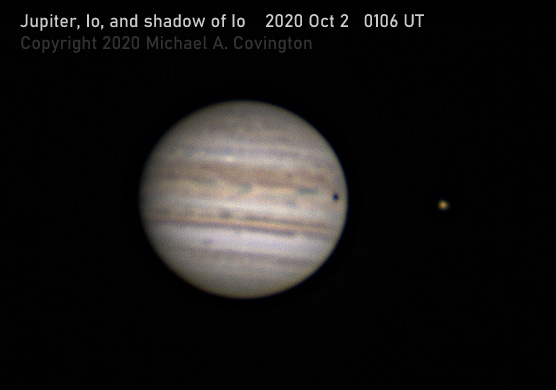

Jupiter

Jupiter is now high in the evening sky right after sunset. You can tell that the sunlight isn't hitting it straight on; the right edge in the picture is the sunlit side. Jupiter's satellites often cross in front of the planet or cast their shadows on it. The Great Red Spot is still there, and still red, but much smaller than when I first saw it half a century ago.

These pictures were also taken with my 8-inch telescope, using stacked video frames.

Here you see some variation in the software settings that I used when processing them.

Just like a film photographer making a print of a negative, a digital astrophotographer has to make decisions about the contrast and color balance of the finished picture.

The steadiness of the earth's atmosphere, through which I am taking pictures, also varies from session to session.


Saturn

Saturn is up there right next to Jupiter; the two planets happen to be in almost the same direction from earth right now. (That means they will also pass behind the sun at about the same time, so in a few months, we won't be able to see either of them, which is a pity; they are similar, and the same astronomers tend to be interested in both of them.)

The hard part about photographing Saturn is that it's about twice as far from the sun as Jupiter is, and so only a quarter as brightly lit. I have to take longer exposures, capturing fewer video frames. But it rotates almost as fast as Jupiter (about 10½ hours per turn versus about 10), so with either planet, I can't expose more than about two minutes without blurring the details on the surface (of the upper atmosphere), when there are any details at all; Saturn is striped, not spotted.
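With mean distances from the sun of about 5.2 and 9.5 astronomical units, the inverse-square law gives a somewhat less extreme ratio than the round numbers above: Saturn receives about 30% as much sunlight per unit area as Jupiter, so each frame needs roughly three times the exposure for the same signal. The sketch ignores differences in the two planets' reflectivity.

```python
JUPITER_AU = 5.2   # mean distance from the sun, astronomical units
SATURN_AU = 9.5


def relative_illumination(d_au, reference_au):
    """Sunlight per unit area falls off as the inverse square of distance from the sun."""
    return (reference_au / d_au) ** 2


ratio = relative_illumination(SATURN_AU, JUPITER_AU)
print(f"Saturn gets about {ratio:.2f} of the sunlight per unit area that Jupiter gets,")
print(f"so each video frame needs about {1 / ratio:.1f}x the exposure for the same signal")
```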

Same technique: 8-inch telescope, focal extender, and video camera.

