|

2025

December

31

|

And that was 2025...

Tonight Melody and I will see the new year in together, as we have done for every new

year since 1977, although on at least three occasions we had to follow Greenwich Mean Time

and greet the new year at 7 p.m. Eastern, because Melody was in a hospital or rehab facility.

Not this time! We're at home and will mark the new year at midnight Eastern.

This will be the 50th time we have done so, though not the 50th anniversary of the first

(that will be the 51st time, when we enter 2027).

This was a year of great changes.

My work with FormFree has greatly scaled down, and I have not yet built my consulting

practice back up to the desired level with other clients, so if you know someone who wants

to do something innovative with software, take a look at my web page.

I did get three books written and published...

and got cataract surgery, giving me better eyesight than I've had in years...

and the grandchildren are prospering... So it has been a good year for everything

except the consulting practice.

Of course the biggest thing affecting the consulting work is our vast worldwide

spasm and panic about Artificial Intelligence.

Right now, people are at the stage of being disappointed that calamity did NOT come.

Software can do some useful things that it couldn't do previously,

but the resulting unemployment didn't come from software doing people's jobs —

it came from companies cutting back on the workforce that they actually needed,

in order to spend speculatively on AI.

Meanwhile, things I worked with ten or twenty years ago are being announced as great

breakthroughs, as the people who took 2-week short courses on LLMs are suddenly

realizing there is, and always has been, more to AI than that!

The other big change is of course political, as Republicans are finding the freedom

to withdraw their support from Trump, and the Epstein scandal has a lot to do with that.

The political scene changes from week to week.

With that note, we wish you and yours a happy and prosperous

(and more politically and economically stable) 2026!

Permanent link to this entry

|

2025

December

28

|

A moonscape with the crater Delaunay

In the middle of this picture is the double (or "heart-shaped") crater Delaunay,

named after a French astronomer who helped determine the orbit of the moon.

Not much has been written about how it got its odd shape, but one can guess that

the impacting meteorite that formed it split into two just before impact.

Taking advantage of our warm weather, I spent last evening (December 27) taking a few

lunar and planetary astrophotos, as well as checking the performance of equipment

and updating software.

It was 60 F and the air was unusually steady.

I used my Celestron 8 EdgeHD at f/10 and my ToupTek 678 camera.

The picture you see above is the best 75% of 2,953 video frames,

aligned, stacked, wavelet-sharpened, and HDR-adjusted for brightness range.
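
For readers curious what "the best 75%" involves, here is a minimal Python sketch of the select-and-average idea. It is not the software used for this picture: the sharpness measure, the function names, and the tiny test frames are all assumptions made for illustration, and the alignment, wavelet, and HDR steps are left out entirely.

```python
def sharpness(frame):
    """Crude sharpness score: sum of squared differences between
    horizontally adjacent pixels (an assumed metric, not the real one)."""
    return sum((row[i + 1] - row[i]) ** 2
               for row in frame
               for i in range(len(row) - 1))


def stack_best(frames, keep_fraction):
    """Average the sharpest `keep_fraction` of the frames, pixel by pixel.

    Each frame is a list of rows of brightness values; all frames must
    have the same dimensions and are assumed to be aligned already.
    """
    ranked = sorted(frames, key=sharpness, reverse=True)
    keep = max(1, int(len(ranked) * keep_fraction))
    best = ranked[:keep]
    height, width = len(best[0]), len(best[0][0])
    return [[sum(f[r][c] for f in best) / keep for c in range(width)]
            for r in range(height)]


# Hypothetical 2x2 frames: three crisp ones and one smeared by bad seeing.
crisp = [[0, 10], [10, 0]]
smeared = [[5, 5], [5, 5]]
stacked = stack_best([crisp, smeared, crisp, crisp], 0.75)
```

Averaging many frames beats down the random noise, and discarding the worst ones keeps moments of bad seeing from smearing the result.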

Permanent link to this entry

Saturn

Saturn's rings are still almost edge-on to us. This is a stack of the best 75%

of 2,675 video frames, wavelet-sharpened.

Permanent link to this entry

Jupiter

By the time Jupiter rose high enough in the sky, later in the night, the air was distinctly

less steady, but because Jupiter is brighter, I was able to take more video frames during the 2 minutes

that I allowed myself — if I had gone longer, Jupiter would have rotated enough to blur some detail,

and the same holds for Saturn. This is a stack of the best 50% of 4,704 video frames, processed

in the same way.

Permanent link to this entry

|

2025

December

27

|

Christmas came

Christmas came yet again. This year, our tree was a tree-shaped metal display

of Christmas ornaments that Melody made a number of years ago. Here are some of them.

Sharon again made lactose-free cheese and then lactose-free lasagna for our

Christmas feast.

The big presents were delivered a few weeks ago — a power wheelchair for Melody to use

at museums and shopping centers, a new laptop for Sharon, and new eyes (or rather

cataract surgery) for me. We did give each other a few smaller things.

In particular, Sharon gave me, purely for its amusement value, a Kodak Charmera,

which is a postage-stamp-sized digital camera that can be carried on a keychain

or charm bracelet.

Here's an example of a picture:

The images are 1.6 megapixels, which with a first-rate

lens could be quite good, but I think the Charmera has a one-element lens. Anyhow,

it does take pictures. It takes a Micro SD card, and I went out and bought one before

finding out that it can store one or two pictures without a memory card,

which may be how I end up using it.

Melody and Sharon together gave me something to go with my new eyes —

a pair of 2.1×42 Galilean binoculars.

A what? Aren't binoculars normally about 7 or 8 power, or more? Who wants 2.1-power

binoculars?

Astronomers, that's who. With their wide field of view, these are fine for scouting out the sky —

recognizing constellations and getting oriented. They don't show a lot more than the unaided eye,

but they do show enough to be worthwhile. They might also be handy at football games for following a larger

area of the field than you could with ordinary binoculars; the trade-off is that you don't get as much

magnification. And people with eye disorders that impair vision might find them very handy.

I thank all my loved ones! Of course the grandchildren in Kentucky got a great hoard of toys,

including some of Cathy's old ones, and had a happy Christmas too.

Permanent link to this entry

|

2025

December

25

|

|

2025

December

24

|

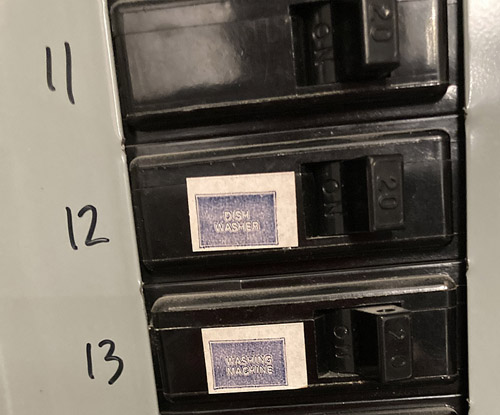

Elec-trickery

Circuit breakers are only supposed to last 20 years, but most of ours turned 51 this year, as did the house.

For some time, one of the lights in our bedroom had flickered occasionally, but we thought

it was a bad LED bulb.

Then I caught two lights flickering in unison — a bad sign.

Then the flickering became frequent, then constant, and I measured a mere 60 volts

(fluctuating) at all the outlets on the circuit.

Suspecting a circuit breaker, I called my electrician

because I don't open breaker panels myself. (They do it wearing gloves; parts of the panel stay live,

even when the main breaker is off, and anyhow I'm not experienced in that kind of work.)

There had been arcing at a defective breaker, and the socket in the panel was damaged.

For now, 5 breakers have been replaced, and the circuit that first displayed the problem

has been temporarily combined with another circuit. And we haven't seen any of these

dodgy LED bulbs flickering anywhere in the house! The electricians must have re-seated

nearly all the breakers, improving the connections. I wonder how many flickers that we

attributed to LED bulbs were actually occasional problems in the breaker panel.

(Granting that some LED bulbs are more sensitive to low voltage than others,

so changing the bulb did often fix the flicker.)

We're getting a new panel, with all the remaining circuit breakers replaced. (It costs, but

it's needed to keep the house safe.) Most of the new breakers will be AFCIs (arc-fault circuit

interrupters), which means they detect the fast-fluctuating current that is characteristic of

an arc (spark) and cut it off even if its average value is not high enough to trip a conventional

circuit breaker. That significantly improves fire safety.

Permanent link to this entry

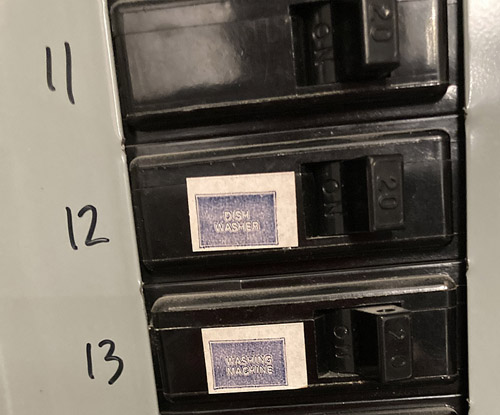

And those labels...

Did you notice the labels on some of the circuit breakers in the picture?

I noticed them when my mother first moved into this house (1977), because they were from

a manufactured set of labels that was sold widely in the 1960s and 1970s and matched

the labels in my Covington grandparents' fuse box.

So every time I looked at our breaker panel, I was reminded of my grandparents.

Some of those labels were removed in yesterday's work; soon they will all be gone.

I remember them as having white lettering on a shiny, solid black background.

As you can see, they have faded a great deal. What kind of printing, used on

labels in the 1960s, would fade like that? They weren't exposed to much light.

Permanent link to this entry

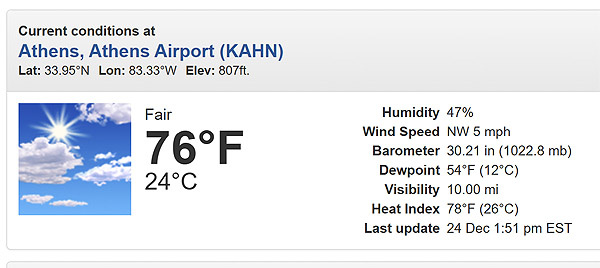

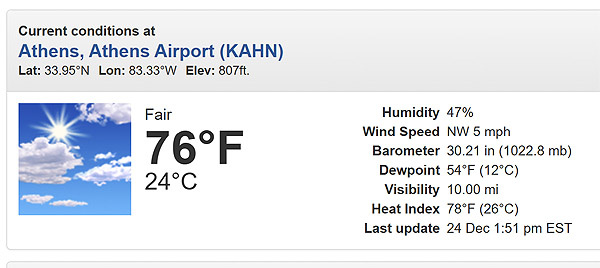

White Christmas? Hah!

This often forebodes ice storms in January.

Merry Christmas to all!

Permanent link to this entry

|

2025

December

23

|

Neuroinclusive

Most refreshing pop psychology term I've heard all day: "Neuroinclusive."

It means "we don't care whether you're 'neurotypical' or 'neurodivergent', we try to accommodate all kinds of people."

I've been getting tired of living in a world where anybody who can think with his mouth closed is apt to be labeled "on the autism spectrum" or "neurodivergent."

Or where you need a psychological label in order to be allowed to have different tastes or preferences.

No... let's look at each other as human, while appreciating that human minds are not all alike.

Permanent link to this entry

|

2025

December

22

|

Can AI write your term paper?

A quick thought that is going to need some refinement...

Now that we're panicking about students using AI to write their papers, maybe we've exposed a gap in education.

Maybe it was a mistake to think that turning in a good paper was proof of knowledge.

Maybe we've miseducated a generation to believe that a paper is the end product.

Maybe we've been allowing a lot of other cheating without knowing it.

Let's pivot toward having students show what they know, in real-time: answer questions in the classroom,

explain what they've written, check someone else's writing both for readability and for accuracy, and so on.

Consider how we teach musical instruments or foreign languages. You have to show what you can do whenever you come to a lesson.

You can't turn in something that was done by someone else.

If you try, you're depriving yourself of much-needed practice, and it's going to show.

Maybe the gap in education actually afflicts not just the students taking flak for AI,

but their teachers, who assumed that a written paper was the product of education.

(Added:)

When I advocate calling on students in class, I don't mean embarrassing them or running a competition they will often lose. If a student is behind, I back up to background material that he is confident of, then show how to move forward from it. I am trying to confirm progress, not create high-stress situations.

Permanent link to this entry

|

2025

December

21

|

Interstellar comet 3I/ATLAS

Last night (Dec. 20) I got my telescope out, taking advantage of clear and

mild (40 F) weather, and solved a lot of the guiding problems that plagued

last month's session (mainly, settings in PHD2 guiding software were wrong).

I used a Celestron 8 EdgeHD, f/7 reducer, Altair 26C camera, and Losmandy

GM811G mount, with NINA and PHD2 software and an iOptron iGuider (probably

inadequate, but it worked).

Here is the object I stayed up until 2 a.m. to get. It is an interstellar comet —

a comet that formed around some other star and was ejected from its planetary system,

eventually reaching ours, which it is now exiting. Its nearest approach to earth was

a couple of days ago, and not very close: it never came nearer than about 170 million miles (1.8 AU),

and now it's receding.

Some have speculated that this is an alien spacecraft. Well, if so, it's coated

with ice like a comet, emits gas like a comet, has a tail like a comet, and is

moving in a purely gravitational orbit with no sign of rocket power. The only

unusual thing about it is that it's moving across our solar system, having come

from outside, and destined to leave again without ever orbiting the sun.

This is a stack of thirty 30-second exposures. They were stacked to track the comet,

not the stars, so the stars look like streaks. The comet is a very faint object,

about 15th magnitude, but I got it!
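
Stacking "to track the comet" can be sketched in a few lines of Python. This is only a toy under stated assumptions: the comet's pixel position in each frame is taken as already known, shifts are whole pixels that wrap around at the edges, and the function names and sample frames are hypothetical. Real stacking software measures sub-pixel motion and does not wrap.

```python
def shift(frame, dy, dx):
    """Move a frame by (dy, dx) whole pixels, wrapping at the edges
    (the wrap-around is a toy simplification)."""
    h, w = len(frame), len(frame[0])
    return [[frame[(r - dy) % h][(c - dx) % w] for c in range(w)]
            for r in range(h)]


def stack_on_target(frames, positions):
    """Average frames after shifting each one so that its target
    lands where the target was in the first frame."""
    ref_r, ref_c = positions[0]
    aligned = [shift(f, ref_r - r, ref_c - c)
               for f, (r, c) in zip(frames, positions)]
    n, h, w = len(aligned), len(frames[0]), len(frames[0][0])
    return [[sum(a[r][c] for a in aligned) / n for c in range(w)]
            for r in range(h)]


# Hypothetical one-row frames: a star (9) fixed at column 0 and a
# comet (6) that moves one column to the right per frame.
frames = [[[9, 6, 0, 0, 0]],
          [[9, 0, 6, 0, 0]],
          [[9, 0, 0, 6, 0]]]
comet_positions = [(0, 1), (0, 2), (0, 3)]
result = stack_on_target(frames, comet_positions)
```

In the result the comet keeps its full brightness in one pixel, while the star's light is spread over three pixels at a third of its brightness each. That is the streaking visible in the picture.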

Permanent link to this entry

The faint galaxy M74

I also took aim at the galaxy M74, one of the hardest of the Messier objects to see,

and the last one of them I observed when completing the list (until, much later, I

became convinced that M102 was not the same object as M101, so I viewed it and told

myself again that I had seen all the Messier objects). This is a stack of 150 30-second

exposures.

Permanent link to this entry

Hind's Variable Nebula and an asteroid

One of my observational goals was to check on Hind's Variable Nebula (NGC 1555),

which, as it turns out, is not putting on much of a show right now.

It's illuminated by a star whose brightness fluctuates. This is a stack of 120 30-second

exposures, and I've processed the picture to be a bit light (and grainy, and showing

some false color) to look for the outer reaches of the nebula.

I'll try again next year and see if it has brightened up.

The short streak below the bright star is the asteroid 8415 (unnamed),

which was moving during the hour-long exposure sequence.

Permanent link to this entry

The Crab Nebula (M1)

Finally, here is one of the most interesting objects in all of astrophysics

(look it up) — which I've photographed before, but not this well.

This is a supernova remnant containing, among other things, a pulsar.

Permanent link to this entry

|

2025

December

20

|

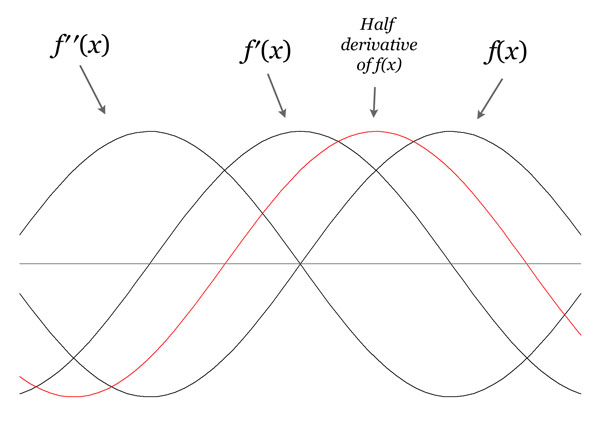

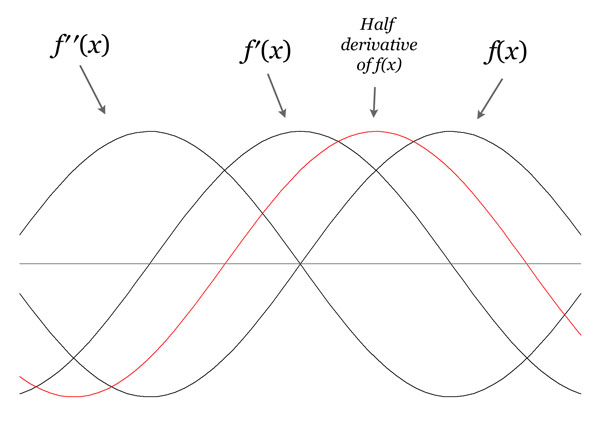

Fractional derivatives (no, I don't mean partial derivatives)

At this advanced age I just came across a curious piece of mathematics — fractional derivatives.

Not partial derivatives (where you differentiate only one argument of a function of many variables).

Fractional derivatives are like the first derivative, second derivative, etc., but of an order that is not a whole number: for example a one-and-a-half derivative, or a half derivative, or a 32.7th derivative.

What could that mean? If you approach it via theory of limits, like a normal person, you don't get anything. It's nonsense.

But consider that the derivative of a sine curve is the same curve shifted left 90 degrees. The second derivative shifts it another 90 degrees. And so forth.

So if you shift a sine curve left 45 degrees, you've only halfway differentiated it. Do that again, and you get the first derivative. Accordingly, the 45-degree shift is a half derivative. Other fractional derivatives work the same way: a derivative of order n is a shift of n × 90 degrees.

And since any waveform can be expressed as a sum of sine curves (Fourier transform), and the derivative of a sum is the sum of the derivatives, you can get fractional derivatives of any waveform.
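
The sum-of-sines argument can be tried out numerically. The sketch below assumes a waveform already written as a list of sine components, so no Fourier transform is computed; the representation and function names are illustrative choices. One detail matters once the frequency is not 1: each whole derivative of sin(ωx) also multiplies the amplitude by ω, so the order-α derivative is assumed here to scale the amplitude by ω^α as well as shifting the phase by α × 90 degrees. For sin(x) itself that factor is 1.

```python
import math


def fractional_derivative(components, alpha):
    """Order-alpha derivative of a sum of sines.

    `components` is a list of (amplitude, omega, phase) triples, each
    standing for amplitude * sin(omega * x + phase) with omega > 0.
    Each component is scaled by omega**alpha and advanced in phase
    by alpha * 90 degrees.
    """
    return [(a * w ** alpha, w, p + alpha * math.pi / 2)
            for a, w, p in components]


def evaluate(components, x):
    """Value of the sum of sines at x."""
    return sum(a * math.sin(w * x + p) for a, w, p in components)


# sin(x) + 0.5*sin(3x), a hypothetical test waveform
wave = [(1.0, 1.0, 0.0), (0.5, 3.0, 0.0)]
half = fractional_derivative(wave, 0.5)
full = fractional_derivative(half, 0.5)   # half derivative applied twice
```

Applying the half derivative twice reproduces the ordinary first derivative, cos(x) + 1.5 cos(3x), which is a useful sanity check on the definition.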

This comes up in electrical engineering — if the phase shift of a capacitor is differentiation, then half as much phase shift through an RC network must be half-differentiation. I had known about phase shifts for a long time but never thought of them as fractional derivatives.
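
To put numbers on the RC case, here is a minimal sketch assuming the simplest possible network, a first-order high-pass (series capacitor, resistor to ground). The component values are hypothetical. The phase lead is 45 degrees exactly at the corner frequency ω = 1/(RC), where the gain is about 0.707; far below the corner it approaches the 90 degrees of a true differentiator, and far above it falls toward zero. So a single RC stage behaves like a half-differentiator only near its corner frequency.

```python
import cmath
import math


def rc_highpass_response(omega, r_ohms, c_farads):
    """Complex gain Vout/Vin of a series-C, shunt-R high-pass filter
    at angular frequency omega (radians per second)."""
    jwrc = 1j * omega * r_ohms * c_farads
    return jwrc / (1 + jwrc)


r, c = 10_000.0, 100e-9          # hypothetical: 10 kilohms, 100 nF
corner = 1 / (r * c)             # corner frequency in rad/s

for factor in (0.1, 1.0, 10.0):
    gain = rc_highpass_response(factor * corner, r, c)
    lead = math.degrees(cmath.phase(gain))
    print(f"{factor:>4} x corner: phase lead {lead:5.1f} deg, gain {abs(gain):.3f}")
```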

Permanent link to this entry

|

2025

December

18

|

Vibe coding (AI generation of computer programs)

It is now common for computer programs to be generated from queries to large language models.

Here are some thoughts I shared on LinkedIn and Facebook this morning:

Some thoughts about AI code generation ("vibe coding"):

(1) If a lot of coders are about to be unemployed, maybe we were paying too many people to code things very similar to what others have already done. Higher-level programming tools were already needed.

(2) If the entry-level programmers are eliminated, where will the experienced programmers come from?

(3) When compilers first appeared c. 1960, people said coders would be out of work. Then they realized skill was still needed. Mathematicians couldn't just write in FORTRAN the formulas that were on their chalkboards -- the variables wouldn't get initialized in the right order, there would be overflows and underflows, etc.

(4) The big difference between compilers and LLMs is that LLMs are nondeterministic. AI-assisted coding turns into a wacky trial-and-error process that needs to mature.

(5) We might need new, deterministic software tools to assist with AI-assisted coding, to check the output in various ways.

(6) AI-assisted coding discourages or blocks the creation of new programming languages.
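
Point (5) can be made concrete with a small sketch. This is one possible deterministic check, not an established tool: the function name, the idea of passing in a required function name, and the sample snippets are all assumptions. It uses only Python's standard `ast` module plus ordinary test cases, and it runs the generated code directly, which in real use would call for a sandbox.

```python
import ast


def check_generated_code(source, required_function, test_cases):
    """Return a list of problems found in generated source code.

    An empty list means the code parsed, defined the required top-level
    function, and gave the expected result for every test case.
    `test_cases` is a list of (args_tuple, expected_result) pairs.
    """
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error: {err.msg} (line {err.lineno})"]

    defined = {node.name for node in tree.body
               if isinstance(node, ast.FunctionDef)}
    if required_function not in defined:
        return [f"missing function {required_function!r}"]

    namespace = {}
    try:
        # Caution: executes untrusted code; sandbox this in real use.
        exec(compile(tree, "<generated>", "exec"), namespace)
    except Exception as err:
        return [f"failed while loading: {err!r}"]

    func = namespace[required_function]
    problems = []
    for args, expected in test_cases:
        try:
            actual = func(*args)
        except Exception as err:
            problems.append(f"{required_function}{args} raised {err!r}")
            continue
        if actual != expected:
            problems.append(
                f"{required_function}{args} returned {actual!r}, "
                f"expected {expected!r}")
    return problems


# Hypothetical LLM outputs for "write add(a, b)".
good = "def add(a, b):\n    return a + b\n"
wrong = "def add(a, b):\n    return a - b\n"
cases = [((1, 2), 3), ((0, 0), 0)]
```

The same inputs always give the same verdict, which is exactly what the LLM itself cannot promise.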

Permanent link to this entry

|

2025

December

16

|

A time of rapid political and cultural change

I have been tied up a few days, partly with a lot of scheduled activities,

and partly with a gastrointestinal infection. Today I'm back...

This month, America is undergoing rapid political and cultural change, revolving

around two things: Trump is falling from favor and the infatuation with

generative AI is coming to a sudden end. Paradoxically, the second has caused

interest in my consulting services to go up, not down, although

I'm still looking for projects

(innovative software of any kind, whether or not you call it AI).

Taking the second point first, I've written an

article in AskWoody about the current paradigm shift in artificial intelligence.

(Click through and read it.) Over two or three years we've

seen the popular impression go through several stages:

1. “They’ve fed all of human knowledge into a huge machine, and it’s smarter than us! The singularity is coming!”

2. “Well, large language models aren’t perfectly reliable, but surely they’ll get better!” (People noticed LLM hallucinations and inaccuracy.)

3. “Let’s use extensive post-training to make LLMs more accurate.” (This inaugurated a massive post-training push that is still going on.)

4. “Let’s bolt conventional software onto LLMs to make them more accurate.” (Thus LLMs were given access to conventional software as tools.)

5. “Let’s insert LLMs into conventional software, just in the places where they will help!”

Along the way, all the talk about AGI ("artificial general intelligence") and "the singularity" went quiet.

People who answer a question in a forum with "I asked ChatGPT" get laughed at; ChatGPT doesn't know things; it only

guesses or summarizes what it has been given; it can help you find knowledge, but it is not itself an authority.

Knowledge takes time to trickle down, so I'm still hearing from people who are at stage 1 while I'm actually working at stage 5.

I'm not saying generative AI is useless or washed up. No; it's very powerful for the things it's good at, which include

paraphrasing and summarizing texts and generating familiar types of pictures and computer programs.

In fact, it is revolutionizing routine computer programming because it's so easy to get a rough draft of your

program generated immediately. Then you have to check it, a step often neglected.

But the continued move toward enormous, commercial LLMs may be financially impossible anyway.

The current ones are losing money at a huge rate. They won't earn back their investment on data centers before it's time to

build newer, better data centers. (That's the big difference between this and the 19th-century railroad boom;

once built, railroads could be operated for decades with little additional construction.) Meanwhile, free LLMs,

including a big one from the Swiss government, are available for people to run on their own PCs without paying

any money to the big companies. Something's going to come crashing down.

Now what about politics? Crucially, Trump is losing support and the hard-right wing of the Republican Party

is pulling away from him (led by the flamboyant Marjorie Taylor Greene).

This is partly for political reasons, and partly because Trump's state of health is causing alarm.

He has fallen asleep in most of the past week's press conferences.

He has given strange, rambling, confabulatory speeches.

He twice responded to the tragic murder of filmmaker Rob Reiner with something that sounded like endorsement,

taking it to be a political assassination in support of himself (Trump).

Almost all the numbers he ever gives are false — and we can't tell if they're intended to deceive

or if he's just confused.

My big question for his supporters is: If he's incapacitated, why do you still want him in office?

Shouldn't you want a competent right-wing president? Or do you want a disabled president so

someone else can pull all the strings?

Never mind, of course, the fast-emerging Epstein sex-trafficking scandal, which is what drove Ms. Greene away

(and there's an organized group of Epstein victims that she is now supporting; they are out for Trump's hide).

Bear in mind that we don't have any proof that Trump committed crimes, but there are accusations in

sworn testimony, and his attempts to prevent the release of

the evidence have been vigorous.

The end of the Trump era is now bringing to a head another issue, the public image of Christians, especially

doctrinally conservative Christians such as myself.

Much of the public equates conservative Christianity with Trumpism. And that's a problem.

The sincere Christians that I know never supported Trump very strongly. Some opposed him all along

(like Russell Moore and myself); some tolerated him very reluctantly as the lesser of two evils.

The latter should be ready to drop him like a hot potato when he is no longer the lesser evil.

A weaker type of Christians, or semi-Christian churchgoers, however, jumped right on Trump's bandwagon,

equating it with God's.

It is much easier to support a politician than to get close to God.

And they have a problem. Matthew 24:24 may apply.

Political enthusiasm will not get you to Heaven. It may get you farther away.

In retrospect, some of these people are going to look just as silly as the people (they exist)

who seem to think that rooting for the right football team is part of the via salvationis.

More importantly, Christendom as a whole has a problem.

There is actually an upsurge of interest in Christ among younger adults, but:

- People think, mistakenly, that if they can't be Trumpists they won't be welcome in church.

- People think, mistakenly, that only some type of "liberal" Christians are non-Trumpists; that is, they think

Christians who don't follow Trump also don't require clear commitment to Christ or to historic

Christian doctrine and morality.

- People think, mistakenly, that if you're not tangled up in the political Right you must be

tangled up in the political Left (this is the secular version of the previous point).

All of these misconceptions seriously undermine our ability to get out the Christian message.

How quickly can we overcome them?

Permanent link to this entry

|

2025

December

4

|

Refilling a snow globe

The photography here isn't up to my usual standard, but I wanted to show you something useful.

Before I repaired it, this snow globe had lost about 1/3 of its water. Now it has only a small bubble

at the top, which is probably desirable — if it had no bubble, there would not be much to absorb

pressure from thermal expansion.

To work on it, I put it upside down on top of a bowl and a piece of non-skid shelf liner:

The black part, containing the music box, is held in with peelable glue and pries off easily.

(Arrows show where to pry.)

That reveals how the globe is sealed: with a big rubber stopper that is sealed in with glue.

My next move was to drill a 1-mm hole near the edge of the stopper, tilt the globe to put that

hole as high up as possible, and use one of Melody's insulin syringes to remove air and inject

distilled water:

I recommend that the very last step should be withdrawing a bit of air or water to leave

negative pressure.

Then I got it good and dry and sealed it with Loctite Shoe Glue (a useful substance that dries flexible),

let the glue dry overnight, and finally set the globe down on a paper towel to check for leaks.

In so doing, I found the original slow leak that had caused it to lose its water

(one drop per day or so, not noticed from the outside) and sealed that.

Another 24-hour test, and I glued the music box back in place and declared it fixed.

Permanent link to this entry

|

2025

December

3

|

Training AI on one person's expertise

My Cambridge friend Mike Knee asks: LLMs are trained on all available text, the whole Internet. Could an AI system be trained on one person's or a few people's expertise so that it is reliable on one subject?

Answer: Yes, but then it wouldn't be an LLM. What you are describing is a knowledge-engineered expert system, which was one of the dominant kinds of AI when I first got into it. Expert systems are very useful for specific purposes but don't act very humanlike (don't carry on conversations); they require formatted input and output. Small ones are commonly built into the control systems of machines nowadays. Training large ones tends to be a formidable task, hence the move to machine learning (automatic training).

Knowledge-engineered rule-based systems live on. I built one over the past several years for the purpose of credit scoring (RIKI). It's vital to control what the score is based on, so machine learning is not appropriate — it would learn biases and prejudices that we can't allow.
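
To show what "control what the score is based on" looks like in code, here is a toy rule-based scorer. It is emphatically not RIKI: every rule, field name, and point value below is invented for illustration. The point is only that each input to the score is written down explicitly and every decision comes with its reasons.

```python
# Each rule: (description, condition on the applicant record, points).
# All rules and point values are hypothetical.
RULES = [
    ("rent paid on time for a year",
     lambda a: a["on_time_rent_months"] >= 12, 30),
    ("income exceeds expenses",
     lambda a: a["monthly_income"] > a["monthly_expenses"], 40),
    ("more than two overdrafts in the past year",
     lambda a: a["overdrafts_last_year"] > 2, -25),
]


def score(applicant):
    """Apply every rule in order; return the total and the rules that fired."""
    total, reasons = 0, []
    for description, condition, points in RULES:
        if condition(applicant):
            total += points
            reasons.append((description, points))
    return total, reasons


# A made-up applicant record.
applicant = {
    "on_time_rent_months": 18,
    "monthly_income": 4200,
    "monthly_expenses": 3900,
    "overdrafts_last_year": 3,
}
total, reasons = score(applicant)
```

Nothing the rules don't mention can influence the result, and the list of reasons can be shown to an auditor or to the applicant.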

What you may have in mind is training an LLM on a small set of reliable texts rather than the whole Internet. In that case it wouldn't learn enough English. An LLM is a model, not of knowledge, but of how words are used in context, and it needs billions of words to learn English vocabulary, syntax, and discourse structure, because it learns inefficiently, with no preconceptions about how human language works.

The reason LLMs give false (hallucinatory) output is not just inaccuracies in their training material. More importantly, it's because they paraphrase texts in ways that are not truth-preserving. Fundamentally, all they are doing is using words in common ways. They are not checking their utterances against reality.

Improvements in commercial LLMs recently have come from (1) fine-tuning (post-training) to make accurate responses more likely (still not guaranteed), and (2) connecting LLMs to other kinds of software and knowledge bases to answer specific kinds of questions (RAG, MCP, etc.).

I think there is a bright future for using LLMs as the user interface to more rigorous knowledge-based software, and also using LLMs to collect material for training and testing knowledge-based systems. I do not think "consciousness will emerge" in LLMs or that they will replace all other software.

Permanent link to this entry

|


This is a private web page,

not hosted or sponsored by the University of Georgia.

Copyright 2025 Michael A. Covington.

Caching by search engines is permitted.

To go to the latest entry every day, bookmark

https://www.covingtoninnovations.com/michael/blog/Default.asp

and if you get the previous month, tell your browser to refresh.

Portrait at top of page by Sharon Covington.

This web site has never collected personal information

and is not affected by GDPR.

Google Ads may use cookies to manage the rotation of ads,

but those cookies are not made available to Covington Innovations.

No personal information is collected or stored by Covington Innovations, and never has been.

This web site is based and served entirely in the United States.

In compliance with U.S. FTC guidelines,

I am glad to point out that unless explicitly

indicated, I do not receive substantial payments, free merchandise, or other remuneration

for reviewing or mentioning products on this web site.

Any remuneration valued at more than about $10 will always be mentioned here,

and in any case my writing about products and dealers is always truthful.

Reviewed

products are usually things I purchased for my own use, or occasionally items

lent to me briefly by manufacturers and described as such.

I am no longer an Amazon Associate, and links to Amazon

no longer pay me a commission for purchases,

even if they still have my code in them.
